Sora vs Gen-3 Alpha: The Battle for AI Video Supremacy

The landscape of generative AI video is currently dominated by a high-stakes, two-sided rivalry: the revolutionary world-modeling capabilities of OpenAI’s Sora and the professional, all-in-one creative suite offered by Runway’s Gen-3 Alpha. While both models represent the pinnacle of current AI video technology, they embody fundamentally different philosophies regarding the future of filmmaking. Sora aims to simulate the world with unprecedented realism and temporal coherence, while Gen-3 Alpha focuses on providing granular control, a professional workflow, and commercial safety features.

For filmmakers, content creators, and media professionals, the choice between these two is a critical decision that impacts everything from creative control to final output quality and integration into existing production pipelines. This comprehensive 2500-word analysis will dissect the core differences, compare their technical outputs, and provide a clear verdict on which platform is the superior tool for various creative workflows.

1. Core Architecture and Technical Philosophy: World Model vs. Creative Suite

The most significant difference between the two models lies in their underlying technical philosophy, which dictates their strengths and weaknesses.

Sora: The World Simulator

Sora is a large-scale diffusion transformer that OpenAI presents as a step toward a "world simulator": a model that learns to simulate the physical world. This approach allows it to generate complex, long-duration scenes with a high degree of temporal consistency.

- Temporal Coherence: Sora's primary strength is its ability to maintain the identity of characters and objects, and the physics of the scene, over extended periods. This is crucial for narrative filmmaking, where continuity is paramount.

- Long-Form Generation: Sora can generate video clips up to 60 seconds long, a feat that significantly surpasses most competitors and moves AI video from short clips to true narrative segments.

- Prompt Adherence: The model excels at interpreting complex, multi-layered prompts, ensuring that the final video accurately reflects the user's detailed vision, including subtle camera movements and scene changes.
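OpenAI's technical report on Sora describes compressing video into a latent space and cutting it into "spacetime patches" that serve as the transformer's tokens. The sketch below illustrates only that patching step. It operates on a raw pixel array rather than a learned latent, and the patch sizes are arbitrary assumptions. It is not OpenAI's code.

```python
import numpy as np


def to_spacetime_patches(video: np.ndarray, pt: int, ph: int, pw: int) -> np.ndarray:
    """Split a (T, H, W, C) array into flattened spacetime patches.

    Returns shape (num_patches, pt * ph * pw * C), one row per token.
    Assumes T, H and W are divisible by the patch sizes (no padding).
    """
    t, h, w, c = video.shape
    if t % pt or h % ph or w % pw:
        raise ValueError("video dimensions must be divisible by the patch size")
    # Split each axis into (number of patches, extent within a patch).
    x = video.reshape(t // pt, pt, h // ph, ph, w // pw, pw, c)
    # Put the three patch-index axes first, then the within-patch axes.
    x = x.transpose(0, 2, 4, 1, 3, 5, 6)
    return x.reshape(-1, pt * ph * pw * c)


# A tiny 8-frame, 16x16 RGB clip split into 2x4x4 spacetime patches.
clip = np.arange(8 * 16 * 16 * 3, dtype=np.float32).reshape(8, 16, 16, 3)
tokens = to_spacetime_patches(clip, pt=2, ph=4, pw=4)
print(tokens.shape)  # (64, 96)
```

At a fixed resolution and patch size, the token count grows linearly with duration, so a 60-second clip yields six times as many tokens as a 10-second one. If every token attended to every other token (full self-attention, which is an assumption about the model), attention cost would grow roughly 36-fold. This helps explain why long clips are computationally expensive.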

Runway Gen-3 Alpha: The Professional Toolkit

Runway’s Gen-3 Alpha, while also a powerful diffusion model, is designed as an integrated component of a professional creative suite. Its focus is not just on raw generation but on control and utility within a production environment.

- All-in-One Ecosystem: Gen-3 Alpha is seamlessly integrated with Runway’s suite of "AI Magic Tools" (e.g., Motion Brush, Inpainting, Green Screen), making it a complete post-production solution.

- Granular Control: Runway prioritizes giving the user precise control over the generated video. Features like Motion Brush allow users to dictate the movement of specific elements within a scene, a level of control essential for commercial and VFX work.

- Iterative Workflow: The model is optimized for a fast, iterative workflow, allowing creators to quickly generate, refine, and edit clips within the same platform.

The image below visually contrasts the two architectural philosophies.


A visual metaphor for two different AI video architectures. The left side shows a complex, interconnected 3D wireframe model of a world (representing Sora's World Model). The right side shows a sleek, modern toolbox with multiple specialized tools (representing Runway's All-in-One Creative Suite).

2. Output Length and Temporal Consistency: The 60-Second Barrier

The length and coherence of the generated video are perhaps the most talked-about metrics in the Sora vs Gen-3 Alpha debate.

Sora’s Narrative Potential

Sora’s ability to generate up to 60 seconds of high-definition video in a single shot is a game-changer for narrative content. This length allows for the creation of short scenes, establishing shots, and complex sequences that were previously impossible.

- Physics Simulation: Sora demonstrates a deep understanding of how objects interact with the physical world, leading to more believable and less "glitchy" motion over time.

- Scene Continuity: The model maintains scene continuity, ensuring that characters and objects do not suddenly change appearance or disappear, a common failure point for shorter-clip models.

Gen-3 Alpha’s Controlled Segments

While Runway has continuously increased its maximum clip length, its core strength remains in generating shorter, highly controlled segments (5 or 10 seconds per generation for Gen-3 Alpha).

- VFX Focus: These shorter clips are ideal for visual effects (VFX) and commercial work, where a specific, controlled action or camera move needs to be integrated into a larger, human-shot project.

- Stitching and Editing: Runway’s ecosystem is built to facilitate the stitching of these shorter clips together, relying on the user to manage the overall temporal consistency and narrative flow.
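The manual stitching step can be sketched with ffmpeg's concat demuxer. This sketch assumes the clips have been downloaded as MP4 files and that ffmpeg is installed. The file names and the per-clip length used in the example are placeholders, not Runway defaults.

```python
import math
from typing import List


def clips_needed(target_seconds: float, clip_seconds: float) -> int:
    """Number of fixed-length clips required to cover a target duration."""
    return math.ceil(target_seconds / clip_seconds)


def build_concat_list(clip_paths: List[str]) -> str:
    """Contents of an ffmpeg concat-demuxer list file, one clip per line.

    Single quotes inside a path are escaped shell-style so quoting stays valid.
    """
    lines = []
    for path in clip_paths:
        escaped = path.replace("'", "'\\''")
        lines.append(f"file '{escaped}'")
    return "\n".join(lines) + "\n"


def concat_command(list_file: str, output: str) -> List[str]:
    """ffmpeg arguments that join the listed clips without re-encoding.

    Stream copy (-c copy) only works if every clip shares codec, resolution
    and frame rate; otherwise the clips must be re-encoded.
    """
    return ["ffmpeg", "-f", "concat", "-safe", "0", "-i", list_file,
            "-c", "copy", output]


# Example: plan a 60-second sequence from 10-second generations.
n = clips_needed(60, 10)
paths = [f"shot_{i:02d}.mp4" for i in range(1, n + 1)]
print(n)  # 6
print(build_concat_list(paths), end="")
print(" ".join(concat_command("shots.txt", "sequence.mp4")))
```

This handles only assembly. Keeping characters, lighting and motion consistent across the seams remains the creator's job.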

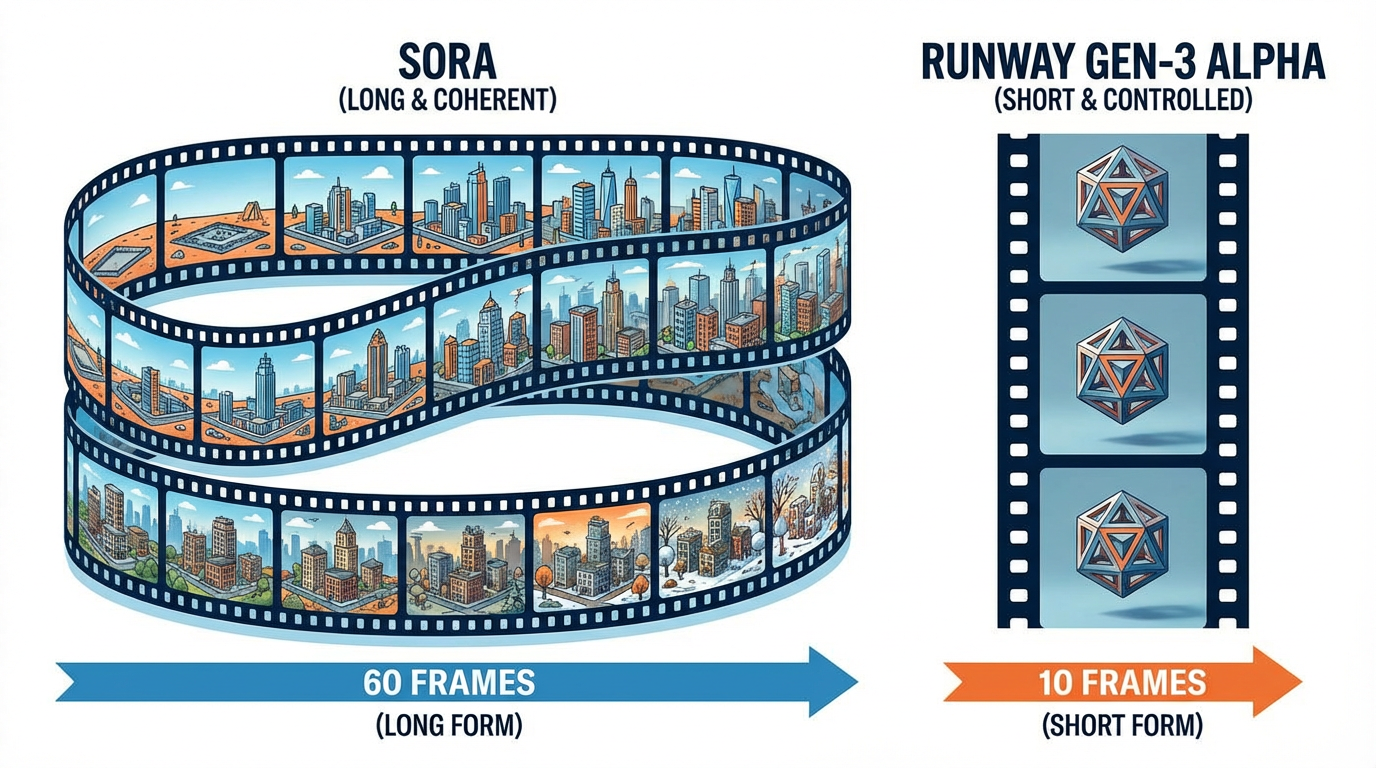

The visual comparison below highlights the difference in output length and coherence.

A visual representation of video length and coherence. The left side shows a long film strip (60 frames visible) with a complex, evolving scene that remains temporally consistent (Sora). The right side shows a short film strip (10 frames visible) with a single, perfectly controlled camera movement (Runway Gen-3 Alpha).

3. Creative Control and Workflow: Direct Manipulation vs. Prompt Refinement

The method by which a creator influences the final video is a key differentiator between Sora and Gen-3 Alpha.

Gen-3 Alpha: Direct, Post-Generation Control

Runway’s philosophy is to empower the creator with direct, post-generation manipulation tools.

- Motion Brush: This flagship feature allows users to "paint" motion onto specific areas of a static image or video, giving them fine-grained, region-level control over movement.

- Advanced Camera Controls: Gen-3 Alpha offers precise controls for camera movements (pan, tilt, zoom, roll), which can be applied to the generated video, mimicking professional cinematography.

- Integrated Editing: The entire workflow—from generation to editing, sound design, and color grading—can be managed within the Runway platform, streamlining the production process.
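Runway has not published how Motion Brush works internally. The toy sketch below only illustrates the user-facing idea: a painted mask plus a direction, with everything outside the mask left still. It works on a tiny integer grid with rigid pixel shifts. A real generator synthesizes plausible motion rather than copying pixels, so this is not Runway's method.

```python
from typing import List, Set, Tuple

Frame = List[List[int]]


def animate_masked_region(frame: Frame, mask: Set[Tuple[int, int]],
                          motion: Tuple[int, int], num_frames: int,
                          background: int = 0) -> List[Frame]:
    """Toy 'motion brush': only pixels inside the mask move; the rest stay fixed.

    Output frame t shifts the masked pixels by t * motion (rows, cols).
    Pixels pushed outside the frame are dropped, vacated cells get
    `background`, and moved pixels overwrite whatever they land on.
    """
    h, w = len(frame), len(frame[0])
    dr, dc = motion
    frames = []
    for t in range(num_frames):
        out = [row[:] for row in frame]
        # Clear the painted region at its original position.
        for r, c in mask:
            out[r][c] = background
        # Paste the painted pixels (read from the untouched input) at their new spots.
        for r, c in mask:
            nr, nc = r + t * dr, c + t * dc
            if 0 <= nr < h and 0 <= nc < w:
                out[nr][nc] = frame[r][c]
        frames.append(out)
    return frames


# One-row "image": only the first pixel is painted, and it moves right.
for f in animate_masked_region([[1, 2, 3, 4, 5]], {(0, 0)}, (0, 1), 3):
    print(f[0])
# [1, 2, 3, 4, 5]
# [0, 1, 3, 4, 5]
# [0, 2, 1, 4, 5]
```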

Sora: Prompt-Centric Control

Sora’s control is primarily exercised through the prompt. While it is highly responsive to detailed instructions, the user has less direct, post-generation control over the clip once it is rendered.

- Prompt Engineering: Mastering Sora requires advanced prompt engineering, using descriptive language to dictate camera angles, lighting, and character actions.

- High Adherence: The model’s strength is its high adherence to the prompt, which makes the prompt the main lever of control. If a change is needed, the user typically re-generates the clip with a revised prompt rather than editing the rendered result.
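Sora takes free-text prompts, so one way to make the revise-and-regenerate loop more systematic is to store the elements a detailed prompt must cover as separate fields and change one at a time. The helper below is hypothetical: `ShotSpec` and its field names are invented for illustration. It only builds prompt strings and does not call any Sora API.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class ShotSpec:
    """Hypothetical breakdown of a detailed text-to-video prompt."""
    subject: str
    action: str      # verb phrase that leads into the setting, e.g. "walks down"
    setting: str
    camera: str = ""
    lighting: str = ""

    def to_prompt(self) -> str:
        """Join the fields into one descriptive prompt, skipping empty ones."""
        parts = [f"{self.subject} {self.action} {self.setting}."]
        if self.camera:
            parts.append(f"Camera: {self.camera}.")
        if self.lighting:
            parts.append(f"Lighting: {self.lighting}.")
        return " ".join(parts)


spec = ShotSpec(
    subject="A woman in a red coat",
    action="walks down",
    setting="a rainy city street at night",
    camera="slow tracking shot from behind",
    lighting="neon signs reflecting on wet pavement",
)
print(spec.to_prompt())

# Revising a single element yields the prompt for the next generation attempt.
revised = replace(spec, camera="low-angle static shot")
print(revised.to_prompt())
```

Because the dataclass is frozen, each revision is a new object. Earlier prompts stay intact for comparison.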

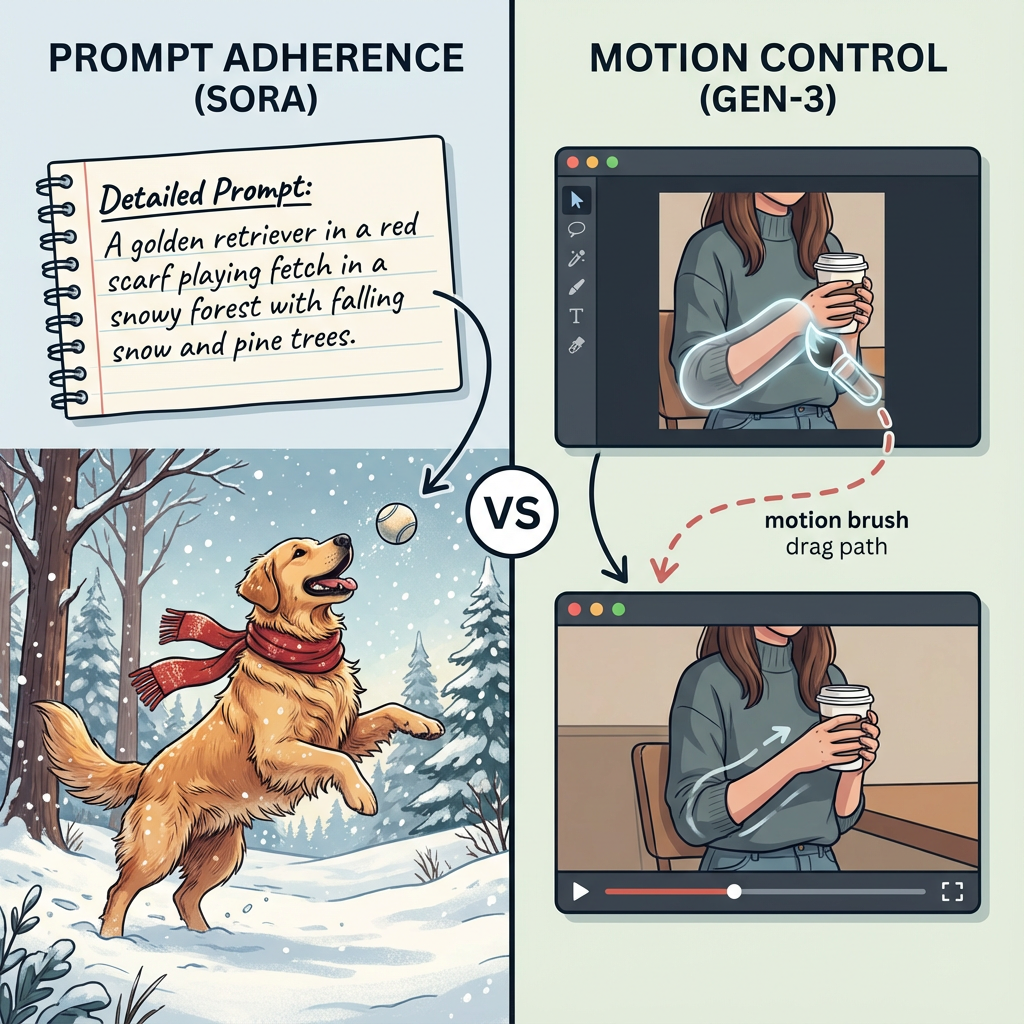

The image below visually compares the two approaches to creative control.

A visual comparing prompt adherence and control. The left side shows a handwritten, detailed prompt perfectly rendered into a complex scene (Sora's adherence). The right side shows a visual of a user dragging a motion brush over a specific area of an image, with the resulting video showing only that area moving (Gen-3's control).

4. Ecosystem and Integration: Open vs. Closed

The ecosystem surrounding the model determines its utility for different user bases.

Runway: The Closed, Professional Ecosystem

Runway is a closed, commercial platform designed to be a one-stop shop for video production.

- Creative Cloud Parallel: Runway is positioning itself as the "Creative Cloud" of AI filmmaking, offering a tightly integrated suite of tools that work seamlessly together. This is highly attractive to production houses and professional editors.

- API Access: Runway offers API access, allowing studios to integrate its generation capabilities directly into their proprietary pipelines, a key feature for enterprise clients.
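Video generation takes far longer than a typical web request, so pipeline integrations are usually asynchronous: a studio tool submits a job, then polls until it finishes. The sketch below shows that pattern in a generic form. The `get_status` callable, the status strings and the `output` field are hypothetical placeholders, not Runway's documented API. Consult the provider's API reference for the real endpoints and fields.

```python
import time
from typing import Callable, Dict

# Hypothetical terminal states; a real provider's API defines its own values.
TERMINAL = {"SUCCEEDED", "FAILED"}


def wait_for_task(get_status: Callable[[str], Dict[str, str]], task_id: str,
                  poll_seconds: float = 5.0, timeout_seconds: float = 600.0,
                  sleep: Callable[[float], None] = time.sleep) -> Dict[str, str]:
    """Poll a generation task until it reaches a terminal state or times out."""
    deadline = time.monotonic() + timeout_seconds
    while True:
        status = get_status(task_id)
        if status["status"] in TERMINAL:
            return status
        if time.monotonic() >= deadline:
            raise TimeoutError(f"task {task_id} did not finish within {timeout_seconds}s")
        sleep(poll_seconds)


# Example with a stand-in status function; a real pipeline would query the API here.
fake_updates = iter([{"status": "PENDING"}, {"status": "SUCCEEDED", "output": "clip.mp4"}])
print(wait_for_task(lambda task_id: next(fake_updates), "task-123", sleep=lambda s: None))
```

Injecting `get_status` and `sleep` keeps the polling logic testable without network access or real waiting.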

Sora: The Open-Ended Platform

Sora, being an OpenAI product, is likely to be integrated into a wider, more open ecosystem, primarily through its API and partnerships.

- Third-Party Integration: Sora’s power will likely be accessed through third-party applications (like video editors or design tools) that leverage the OpenAI API. This allows for maximum flexibility but requires the user to manage multiple tools.

- Focus on Core Model: OpenAI’s focus is on the core model's capability, leaving the development of the surrounding creative tools to partners.

The image below illustrates the difference in ecosystem integration.


A side-by-side comparison of two interfaces. The left side shows a minimalist, clean interface with a single prompt box and a small OpenAI logo (Sora). The right side shows a complex, professional video editing suite interface with multiple tracks, tool icons (like Motion Brush), and the Runway logo (Gen-3 Alpha).

5. Commercial Safety and Provenance: C2PA vs. Safety Protocols

For commercial use, the legal and ethical framework of the generated content is paramount. This is an area where Sora and Gen-3 Alpha have taken distinct approaches.

Gen-3 Alpha: Commercial-Ready Provenance

Runway has made commercial safety and provenance a core feature of Gen-3 Alpha.

- C2PA Provenance: Runway has adopted the C2PA (Coalition for Content Provenance and Authenticity) standard for Gen-3 Alpha. Generated videos carry provenance metadata that identifies their AI origin, a crucial feature for media companies concerned with authenticity and deepfakes.

- Training Data: Clarity about training data is a key factor for commercial users seeking to avoid copyright infringement risks, although Runway has so far described Gen-3 Alpha's training data only in general terms.
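At its core, C2PA provenance binds a set of claims about an asset (what produced it, and how) to the asset's content, so later tampering can be detected. The sketch below shows only that hash-binding idea, using made-up field names. It omits the certificate-based signatures, the official schema and the in-file embedding that the actual C2PA specification requires, so it is no substitute for real Content Credentials tooling.

```python
import hashlib


def make_record(content: bytes, generator: str) -> dict:
    """Build a simplified provenance record bound to the content by its hash.

    Illustrative only: real C2PA manifests are signed, embedded in the asset,
    and follow their own schema; none of that is modeled here.
    """
    return {
        "generator": generator,  # hypothetical field names throughout
        "ai_generated": True,
        "content_sha256": hashlib.sha256(content).hexdigest(),
    }


def content_matches(content: bytes, record: dict) -> bool:
    """True if the content is byte-for-byte what the record was created for."""
    return hashlib.sha256(content).hexdigest() == record["content_sha256"]


video_bytes = b"stand-in for encoded video bytes"
record = make_record(video_bytes, generator="example-video-model")
print(content_matches(video_bytes, record))            # True
print(content_matches(video_bytes + b"edit", record))  # False
```

Because the record stores a hash, any edit to the bytes breaks the match. In real C2PA, the signature additionally proves who issued the claims.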

Sora: Robust Safety Protocols

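OpenAI has implemented robust safety protocols, including an in-house visual moderation system and adversarial testing, to prevent the generation of harmful or misleading content. It has also said it is building a classifier to detect Sora-generated video and plans to include C2PA metadata if Sora is deployed in an OpenAI product.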

- Safety-First Access: Sora’s limited access (at the time of writing, only a small group of artists and researchers) is part of a safety-first rollout strategy, allowing OpenAI to test and refine its guardrails before a wider release.

- Focus on Misinformation: OpenAI’s safety focus is heavily weighted toward preventing the creation of deepfakes and misinformation, a critical concern given the model's hyper-realistic output.

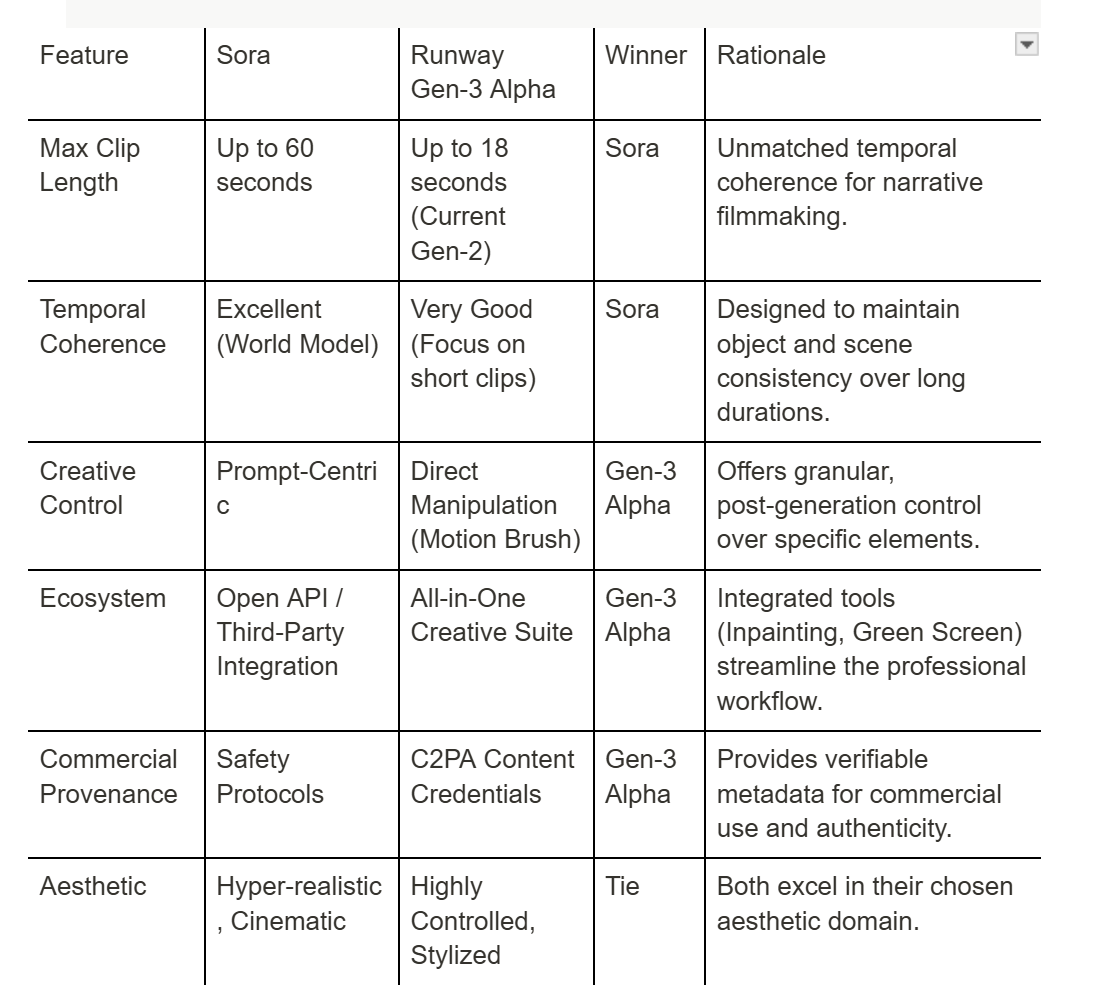

6. Feature Comparison Summary Table

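To provide a clear, at-a-glance comparison, the table below summarizes the key differences between the two platforms as described in the sections above.

| Feature | OpenAI Sora | Runway Gen-3 Alpha |
|---|---|---|
| Core philosophy | World model that simulates the physical world | Model embedded in an all-in-one professional creative suite |
| Clip length | Up to 60 seconds in a single generation | Shorter, highly controlled segments stitched together by the user |
| Primary control method | Detailed prompts; changes require re-generation | Direct tools such as Motion Brush and camera controls |
| Ecosystem | Core model accessed via API and third-party partners | Closed, integrated platform with API access for studios |
| Safety and provenance | Moderation, adversarial testing, limited rollout | C2PA provenance metadata on generated videos |
| Availability | Limited research preview | Available now through Runway subscriptions |
| Best suited for | Long-form, narrative-driven content | VFX, commercial assets, iterative production work |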

7. Final Verdict: Which AI Video Generator Reigns Supreme?

There is no single answer to which model is superior; it depends entirely on the user's objective.

If your goal is to create long-form, narrative-driven content that requires unprecedented temporal coherence and realism, and you are willing to rely on advanced prompt engineering for control, Sora is the clear winner. It is the tool for the future of cinematic storytelling, acting as a true "world engine."

If your goal is to create short, highly controlled visual effects, commercial assets, or stylized content that needs to be integrated into a professional post-production pipeline with verifiable provenance, Runway Gen-3 Alpha is the superior choice. It is the tool for the modern, iterative, and commercially-focused content creator.

The competition between Sora and Gen-3 Alpha is a powerful engine for innovation. Sora is pushing the boundaries of what AI can simulate, while Runway is pushing the boundaries of how AI can be integrated into a professional, commercial workflow. The ultimate winner is the creator, who will benefit from the rapid advancements driven by this rivalry.

8. The Future of the Rivalry: Commercialization and Ecosystem Integration

The current Sora vs Gen-3 Alpha matchup is a snapshot of a rapidly moving industry. The future of this rivalry will be defined by two major factors: the timeline for commercial access and the integration of these models into broader creative ecosystems.

Commercialization Timeline and Access

At the time of writing, Sora is available only as a limited research preview. Its eventual commercial release is highly anticipated, but the timing remains uncertain. The rollout will likely follow a phased approach:

- API Access: Initial access will likely be granted to developers and enterprise partners via the OpenAI API, similar to DALL-E 3 and GPT-4. This will allow third-party applications to integrate Sora's generation capabilities.

- Consumer Access: A later phase will see integration into consumer products, such as ChatGPT Plus or a dedicated OpenAI video platform. The pricing model is expected to be premium, reflecting the high computational cost of generating long, high-fidelity video.

Runway Gen-3 Alpha, conversely, is already integrated into a commercial platform. Its development is tied directly to Runway's business model, ensuring a continuous, accessible path for paying subscribers.

- Immediate Utility: Users can access Gen-3 Alpha's capabilities today through Runway's subscription tiers, making it the immediate choice for professionals who cannot wait for a future release.

- Feature Rollout: New features are rolled out iteratively to the existing user base, allowing creators to immediately incorporate the latest advancements into their work.

Ecosystem Integration: The Adobe and Google Factor

The true long-term battle will be fought over ecosystem integration. Both models are highly likely to be integrated into the dominant creative and technological platforms.

- Runway and Adobe: Runway has a strong, though not exclusive, relationship with the professional creative community that relies on Adobe products (Premiere Pro, After Effects). Gen-3 Alpha's focus on professional control and C2PA provenance makes it a natural fit for integration into the Adobe Creative Cloud workflow, potentially becoming the "AI layer" for professional video editing.

- Sora and the Wider Tech Landscape: Backed by OpenAI and its partner Microsoft, Sora is likely to be integrated into the Microsoft ecosystem (e.g., Copilot, Designer). Its core technology could also become a component of other large platforms, positioning it as a challenger to Google's own Veo model or even as a capability Apple could leverage for its professional video tools.

The rivalry between Sora and Gen-3 Alpha is therefore a proxy war between two visions: the World Model (Sora) as a foundational technology to be licensed, and the Creative Suite (Runway) as a vertically integrated platform that owns the entire workflow. For the end-user, this means a future where the best AI video tool will be the one that is most seamlessly integrated into their daily work environment. This integration, more than raw technical specs, will ultimately determine market dominance.