Sora vs Midjourney Video: The Battle for Cinematic AI Video Dominance

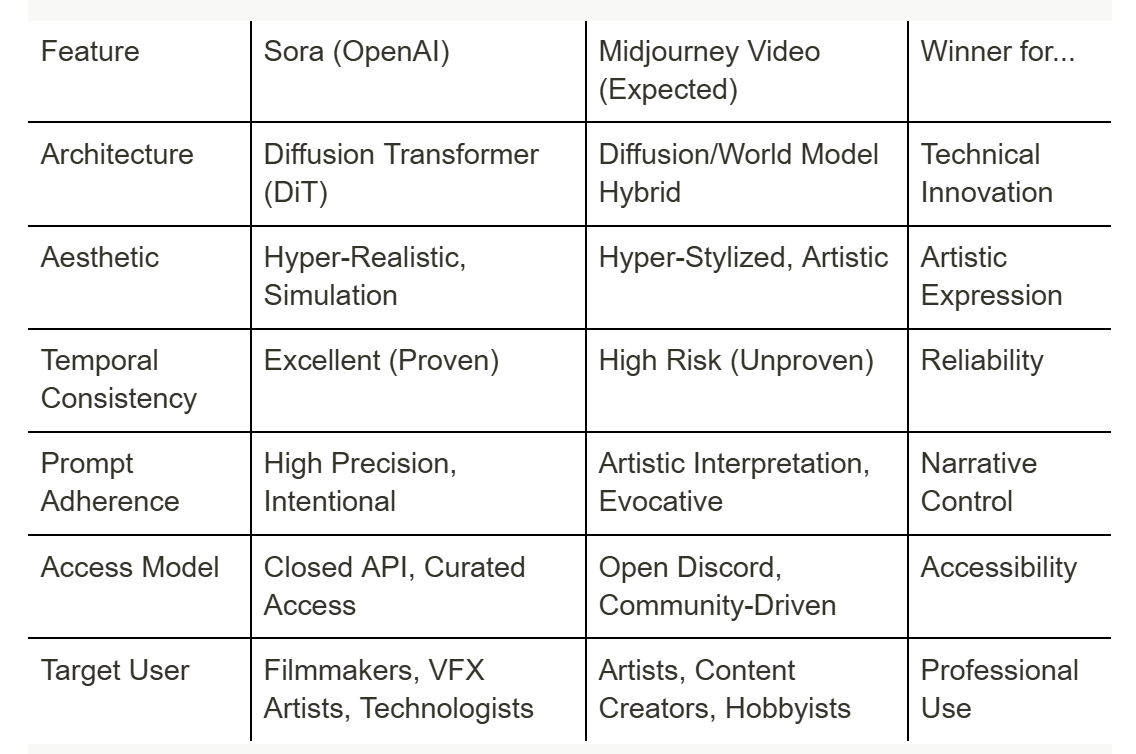

The landscape of generative AI video is defined by a fierce, high-stakes competition between two titans: OpenAI’s Sora and the highly anticipated, yet unreleased, video model from Midjourney. While Sora has captured the world’s attention with its stunning, hyper-realistic, and temporally consistent video clips, Midjourney’s reputation for producing the most artistic and cinematic still images has set an impossibly high bar for its eventual video debut. This showdown is not just a technological race; it is a clash of philosophies—the technical, world-modeling precision of OpenAI versus the artistic, community-driven vision of Midjourney.

For filmmakers, content creators, and technologists, the question is no longer whether AI will revolutionize video, but which platform will define the new era of cinematic creation. This analysis compares the known capabilities of Sora with the rumored and expected features of Midjourney Video. We explore the differences in their underlying technical architectures, their approaches to cinematic quality and prompt adherence, temporal consistency, public access, and the ethical and commercial questions both face. By the end, you will have a clear picture of the current state of the art and the likely trajectory of the Sora vs Midjourney Video rivalry.

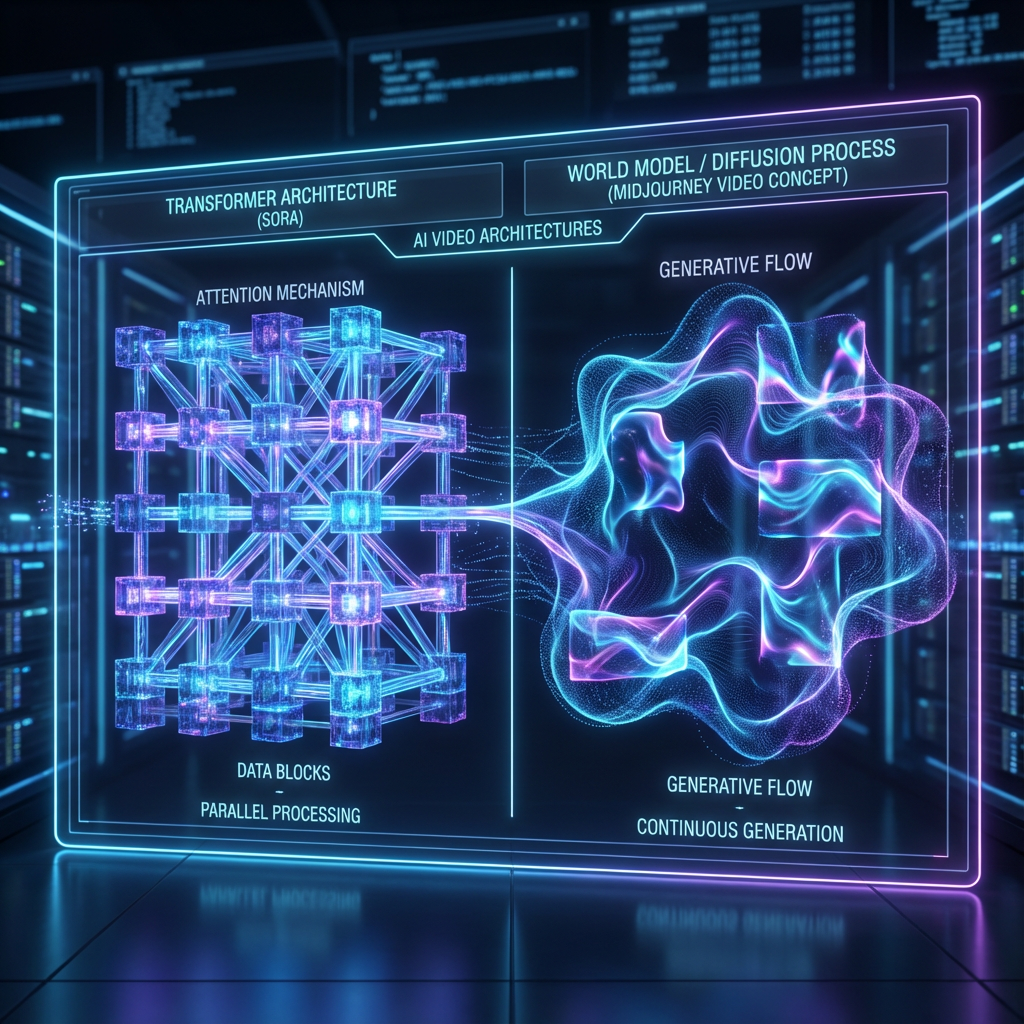

The Technical Foundation: Transformer vs. World Model

The core difference between these two platforms lies in the fundamental technology that powers them. This distinction dictates everything from the length of the generated video to its temporal consistency.

Sora: The Unified Transformer Architecture

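Sora is built on a Diffusion Transformer (DiT) architecture. According to OpenAI's technical report, it first compresses video into a lower-dimensional latent space and then splits that representation into "spacetime patches," which it processes much as a large language model processes text tokens.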

- World Model: OpenAI presents video generation models like Sora as a promising path toward "world simulators." Trained at scale, Sora appears to learn aspects of real-world physics and logic, which is what enables it to maintain object permanence, plausible interactions, and complex camera movements over long durations, although OpenAI acknowledges that it still mishandles some interactions, such as glass shattering.

- Temporal Consistency: By processing the entire video sequence simultaneously, Sora excels at temporal consistency, ensuring that objects, lighting, and camera movements remain smooth and logical throughout the clip. This is a massive advantage over earlier models that often suffered from "flicker" or objects disappearing and reappearing.

- Scalability: The transformer architecture is inherently scalable, allowing Sora to generate videos up to a minute long in various resolutions and aspect ratios, a feat unmatched by its contemporaries.
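To make the "spacetime patch" idea concrete, here is a minimal Python sketch that cuts a toy grayscale clip into non-overlapping patches, each spanning a few frames and a small pixel window, and flattens each patch into a token. It is a simplification under stated assumptions: OpenAI's report says Sora patches a compressed latent representation rather than raw pixels, and the patch sizes and helper names here (`spacetime_patches`, `make_clip`) are hypothetical.

```python
from typing import List

Frame = List[List[float]]  # one grayscale frame: rows of pixel values
Video = List[Frame]        # a clip: an ordered list of frames
Patch = List[float]        # one flattened spacetime patch, i.e. one "token"


def spacetime_patches(video: Video, pt: int, ph: int, pw: int) -> List[Patch]:
    """Split a clip into non-overlapping pt x ph x pw spacetime patches.

    Each patch spans pt consecutive frames and a ph x pw pixel window,
    and is flattened into a 1-D list, much like a text token.
    """
    t, h, w = len(video), len(video[0]), len(video[0][0])
    if t % pt or h % ph or w % pw:
        raise ValueError("clip dimensions must be divisible by the patch size")
    patches: List[Patch] = []
    # Walk the clip in time, then rows, then columns, so token order is
    # deterministic; a transformer would also attach position information.
    for t0 in range(0, t, pt):
        for y0 in range(0, h, ph):
            for x0 in range(0, w, pw):
                patches.append([
                    video[ti][yi][xi]
                    for ti in range(t0, t0 + pt)
                    for yi in range(y0, y0 + ph)
                    for xi in range(x0, x0 + pw)
                ])
    return patches


def make_clip(frames: int, height: int, width: int) -> Video:
    """Build a synthetic clip whose pixel values encode (frame, row, col)."""
    return [[[float(f * 10000 + y * 100 + x) for x in range(width)]
             for y in range(height)] for f in range(frames)]


if __name__ == "__main__":
    square = spacetime_patches(make_clip(4, 8, 8), pt=2, ph=4, pw=4)
    wide = spacetime_patches(make_clip(4, 8, 16), pt=2, ph=4, pw=4)
    print(len(square), len(square[0]))  # 8 tokens of 32 values each
    print(len(wide), len(wide[0]))      # 16 tokens of 32 values each
```

Because a wider or longer clip simply yields more tokens of the same size, one model can in principle accept different resolutions, durations, and aspect ratios, which is the flexibility the scalability point describes.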

Midjourney Video: The Expected Diffusion/World Model Hybrid

While Midjourney has not officially released its video model, its image model is generally understood to be a highly refined diffusion model. It is widely speculated that the video model will take a hybrid approach, combining a diffusion process for high-fidelity image quality with a temporal layer for motion.

- Artistic Fidelity: Midjourney's strength is its ability to translate artistic concepts into stunning visuals. Its video model is expected to prioritize painterly, stylized, and hyper-aesthetic output, potentially sacrificing some photorealistic accuracy for a more distinct, artistic look.

- World Model Philosophy: Midjourney's founder, David Holz, has spoken about the company's vision of creating a "world engine," suggesting a deep-learning model that understands and simulates reality, similar to Sora's underlying goal. However, their execution is likely to be driven by artistic intent rather than pure simulation.

- Potential Weakness: If Midjourney relies too heavily on a traditional diffusion architecture, it may initially struggle with the temporal consistency that Sora has mastered, leading to shorter, less complex clips.
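In many video diffusion models, a "temporal layer" takes the form of temporal self-attention: the features of each frame at a given spatial location attend to the same location in every other frame. Whether Midjourney uses this design is unknown. The sketch below is a generic, simplified illustration that assumes identity query, key, and value projections in place of learned weights; `temporal_attention` is a hypothetical name.

```python
import math
from typing import List

Vector = List[float]


def softmax(scores: List[float]) -> List[float]:
    """Numerically stable softmax."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def temporal_attention(frames: List[Vector]) -> List[Vector]:
    """Simplified temporal self-attention at a single spatial location.

    frames[i] is the feature vector for frame i at that location. Each
    output mixes information from every frame, weighted by scaled
    dot-product similarity. Real layers learn query/key/value projections;
    here they are the identity to keep the sketch short.
    """
    d = len(frames[0])
    scale = 1.0 / math.sqrt(d)
    out: List[Vector] = []
    for q in frames:
        weights = softmax([scale * sum(a * b for a, b in zip(q, k)) for k in frames])
        out.append([sum(w * v[j] for w, v in zip(weights, frames)) for j in range(d)])
    return out


if __name__ == "__main__":
    # Two dissimilar frames: each output leans toward its own frame
    # but borrows from the other.
    print(temporal_attention([[1.0, 0.0], [0.0, 1.0]]))
```

In this simplified form, each output is a weighted blend of all frames, so features that would otherwise drift independently from frame to frame are pulled toward one another. That sharing of information across time is what such layers rely on to reduce flicker.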

The image below visually represents this architectural difference.

Illustration: on the left, a complex, interconnected grid of data blocks representing Sora's transformer model; on the right, a swirling, organic cloud of light and color representing the diffusion process behind the Midjourney Video concept.

Cinematic Quality and Aesthetic: Hyper-Realism vs. Hyper-Stylization

The aesthetic output is where the Sora vs Midjourney Video debate becomes most subjective, yet most critical for creators.

Sora: The Hyper-Realistic Simulator

Sora's output is characterized by its commitment to photorealism. The goal is to create a video that is indistinguishable from footage shot with a high-end camera.

- Camera Work: Sora demonstrates an impressive understanding of cinematic language, including realistic depth of field, complex camera movements (dollies, cranes, pans), and accurate lighting effects.

- Detail and Fidelity: The level of detail in Sora's videos, from the texture of clothing to the reflections in water, is often cited as its most revolutionary feature. It is a tool for simulation and realism.

- Prompt Adherence: Sora applies the descriptive re-captioning technique introduced with DALL-E 3 and uses GPT to expand short user prompts into detailed captions. This helps it follow complex, multi-clause prompts with high accuracy, translating intricate narrative ideas directly into video.

Midjourney Video: The Artistic Visionary

Midjourney's image model is known for its distinct, often surreal, and highly artistic aesthetic. Its video model is expected to carry this signature style forward.

- Artistic Style: Midjourney Video is likely to appeal to artists seeking a unique, stylized look—something that immediately screams "AI art" but in a beautiful, intentional way. This could include painterly textures, exaggerated colors, and surreal compositions.

- Control over Style: Just as Midjourney's image model offers extensive style parameters (like --style raw or specific version numbers), its video model is expected to give users granular control over the aesthetic, allowing for a signature look that can be applied consistently.

- The "Midjourney Look": While Sora aims for a universal, realistic look, Midjourney Video will likely aim for a proprietary, recognizable look that becomes a style in itself, much like its still images.

The image below compares the expected aesthetic output.

Illustration: the same futuristic city street at night in the rain, shown side by side. The left half (Sora) is hyper-realistic with precise lighting; the right half (Midjourney Video concept) is highly stylized and painterly.

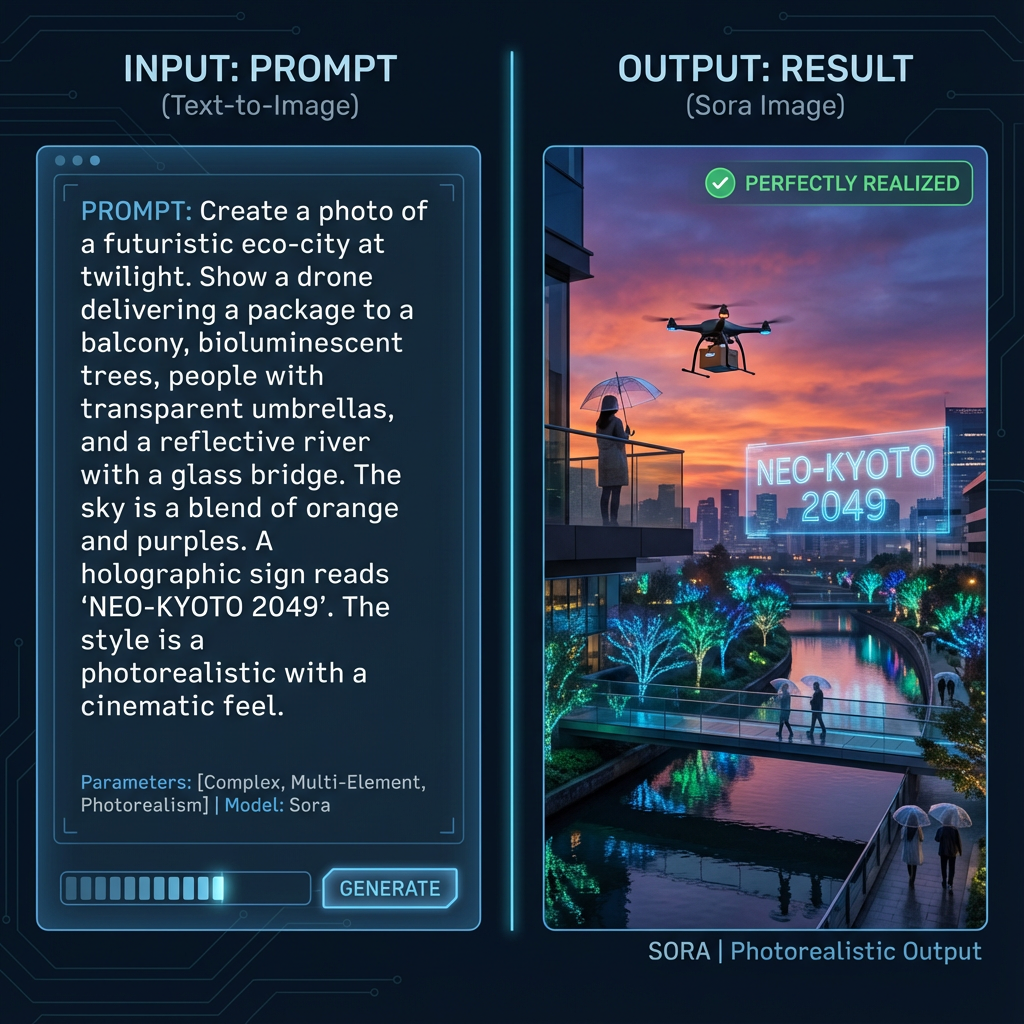

Prompt Adherence and Control: Intentionality vs. Interpretation

The way a model interprets a user's prompt is crucial for professional workflow.

Sora: Precision and Intentionality

Sora's strength lies in its ability to follow complex, long-form prompts, which OpenAI attributes in part to training on highly descriptive video captions.

- Complex Narratives: Users can describe a scene, a character, an action, and a camera movement, and Sora can often execute all elements simultaneously, demonstrating a deep understanding of the prompt's intent.

- Text in Video: Sora has shown a remarkable ability to generate legible text within a video, a long-standing challenge for generative models.

- Prompt Expansion: According to OpenAI, Sora, like DALL-E 3, uses GPT to turn short user prompts into longer, more detailed captions before generation. Writing a detailed prompt up front is therefore likely the most direct way for a creator to control the final output.
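The multi-element prompts described in this section (scene, character, action, camera movement) can be assembled from structured fields so that no element is forgotten. This is a hypothetical helper: the `ShotDescription` class and its fields are illustrative assumptions, not part of any Sora interface, and the code only produces prompt text.

```python
from dataclasses import dataclass


def _clause(text: str) -> str:
    """Trim a fragment, drop any trailing period, and capitalize it."""
    text = text.strip().rstrip(".")
    return text[:1].upper() + text[1:]


@dataclass
class ShotDescription:
    """Hypothetical structure for a multi-element video prompt."""
    scene: str
    subject: str
    action: str
    camera: str
    style: str = ""

    def to_prompt(self) -> str:
        # Order mirrors a shot brief: where, who and what, how it is filmed, look.
        parts = [self.scene, f"{self.subject} {self.action}", self.camera, self.style]
        # Skip empty fields so optional elements leave no stray punctuation.
        return ". ".join(_clause(p) for p in parts if p.strip()) + "."


if __name__ == "__main__":
    shot = ShotDescription(
        scene="A rain-soaked neon street in Tokyo at night",
        subject="a woman in a red coat",
        action="walks toward the camera",
        camera="slow dolly-in at eye level, shallow depth of field",
    )
    print(shot.to_prompt())
```

Keeping each element in its own field makes it easy to vary one variable at a time, for example the camera move, while holding the rest of the shot constant between generations.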

Midjourney Video: Artistic Interpretation

Midjourney's image model is famous for its "artistic interpretation," often taking a prompt and elevating it with unexpected, beautiful details.

- Creative License: Midjourney Video is expected to continue this trend, offering a more collaborative experience where the AI acts as a creative partner, interpreting the prompt to maximize artistic impact.

- Short-Form Prompts: Midjourney's Discord-based workflow favors shorter, more evocative prompts, relying on the model's internal aesthetic engine to fill in the gaps.

- Control via Parameters: Control is often achieved through a vast array of parameters (e.g., aspect ratio, chaos, stylization) rather than through detailed, descriptive text.
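In Midjourney's image model, these parameters are appended as double-dash flags at the end of a prompt (for example `--ar`, `--chaos`, `--stylize`, and `--style raw`). The helper below is a hypothetical sketch, not an official Midjourney API. It assumes the video model will accept the same flags, which has not been confirmed, and it uses the image model's value ranges (chaos 0 to 100, stylize 0 to 1000, default 100).

```python
def midjourney_prompt(text: str, aspect: str = "16:9", chaos: int = 0,
                      stylize: int = 100, raw: bool = False) -> str:
    """Append Midjourney-style parameters to a short, evocative prompt.

    Hypothetical helper: it only builds the text a user would type in
    Discord; it does not call any Midjourney service.
    """
    w, h = (int(n) for n in aspect.split(":"))
    if w <= 0 or h <= 0:
        raise ValueError("aspect ratio must use positive integers, e.g. '16:9'")
    if not 0 <= chaos <= 100:
        raise ValueError("--chaos accepts values from 0 to 100")
    if not 0 <= stylize <= 1000:
        raise ValueError("--stylize accepts values from 0 to 1000")
    params = [f"--ar {w}:{h}", f"--chaos {chaos}", f"--stylize {stylize}"]
    if raw:
        params.append("--style raw")  # less opinionated, more literal look
    return f"{text.strip()} {' '.join(params)}"


if __name__ == "__main__":
    print(midjourney_prompt("rain-slick neon alley, cinematic", chaos=20,
                            stylize=250, raw=True))
    # prints: rain-slick neon alley, cinematic --ar 16:9 --chaos 20 --stylize 250 --style raw
```

The prompt text stays short and evocative while the flags carry the stylistic control, which is the division of labor this workflow favors.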

The image below illustrates Sora's prompt adherence.

Illustration: a split image with a detailed, multi-element text prompt on the left and, on the right, the resulting Sora output with every element present and correct.

Access, Community, and Ecosystem: Closed API vs. Open Discord

The user experience and accessibility are fundamentally different, reflecting the corporate structures behind the models.

Sora: Closed Access and API Integration

At the time of writing, Sora is in a closed testing phase, accessible only to a select group of researchers, artists, and filmmakers.

- Access Model: The long-term plan is likely a controlled API access model, similar to GPT-4, integrated into platforms like ChatGPT and potentially third-party applications. This ensures quality control and monetizes the immense computational cost.

- Ecosystem: Sora is part of the larger OpenAI ecosystem, so it is likely to integrate closely with other OpenAI tools, such as GPT-4 for prompt generation and DALL-E 3 for image assets.

- Community: The community is currently small and curated, focused on professional feedback and technical stress-testing.

Midjourney Video: Community-Driven and Discord-Native

Midjourney's success is built on its vibrant, public, and collaborative Discord community.

- Access Model: Midjourney Video is expected to launch directly into the Discord environment, offering public access through a subscription model. This fosters a culture of sharing, learning, and rapid iteration.

- Ecosystem: Midjourney's ecosystem is centered around its Discord bot and web gallery, with a focus on image-to-image and image-to-video workflows.

- Community: The community is massive, active, and drives innovation through shared prompts, style discoveries, and collaborative projects. This open environment is a key differentiator in the Sora vs Midjourney Video rivalry.

The image below compares the two access models.

Illustration: on the left, a single locked API key icon beside a small, exclusive group of people (Sora's closed access); on the right, a large, open Discord server icon with a crowd of collaborating users (Midjourney's community-driven access).

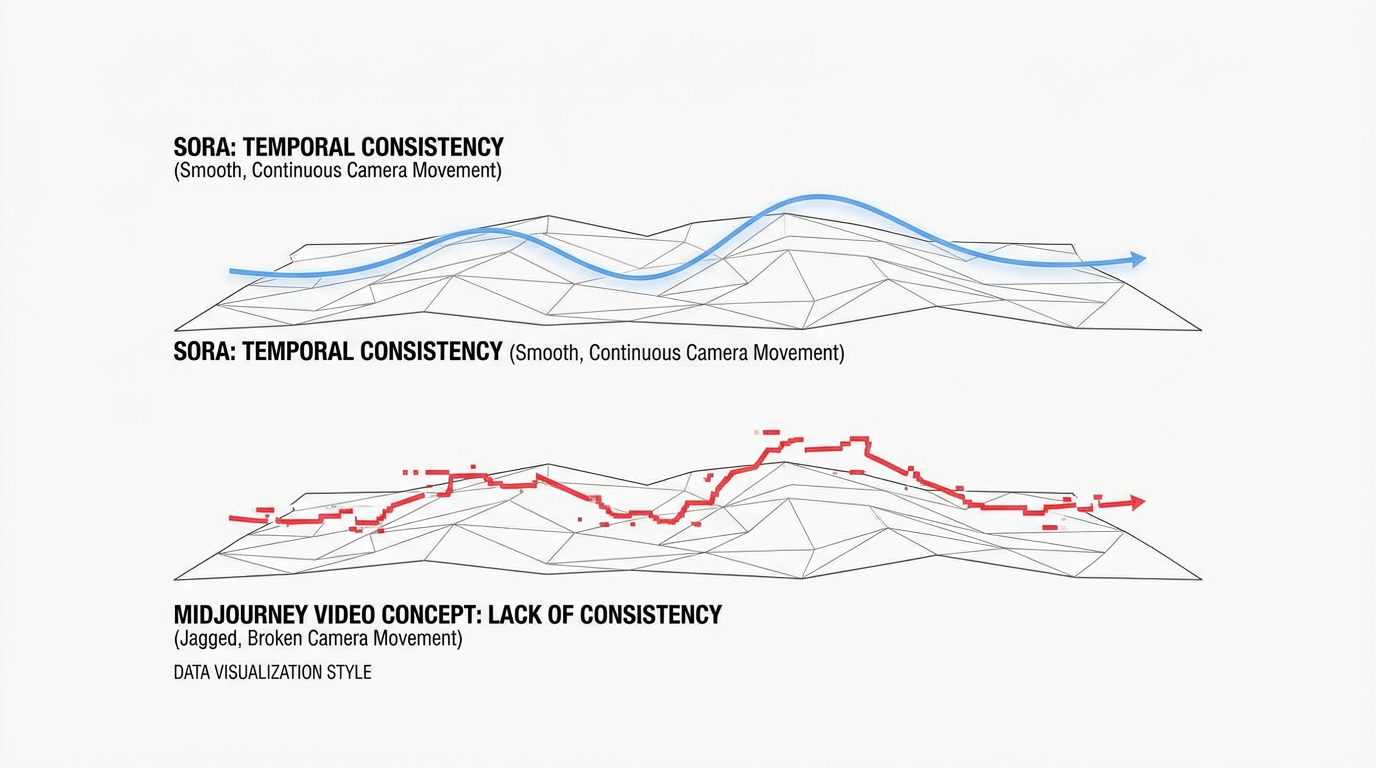

The Temporal Consistency Challenge

Temporal consistency—the ability of a video model to maintain the identity of characters and objects, and the logic of motion over time—is the single most difficult technical hurdle in AI video generation.

Sora's Mastery of Time

Sora's transformer architecture has demonstrated a breakthrough in this area.

- Object Permanence: In Sora's videos, objects often (though not always) persist without randomly disappearing or changing form, and characters generally maintain their appearance and clothing throughout the clip, even when they are occluded or move off-screen and return.

- Realistic Physics: The model appears to have learned basic physics, such as gravity, momentum, and collision, allowing for highly realistic interactions between elements in the scene.

- Long-Form Coherence: The ability to generate a minute-long video with a consistent narrative and visual style is a direct result of its temporal coherence.

Midjourney Video's Expected Hurdle

While Midjourney's image quality is superb, translating that quality into a temporally consistent video is a massive challenge.

- Image-to-Video Limitations: Earlier image-to-video models often struggled with temporal consistency, leading to a "wobbly" or "flickering" effect. Midjourney will need to overcome this with a dedicated temporal module.

- The "World Engine" Solution: If Midjourney's "world engine" vision is realized, it will likely solve this problem by simulating the scene's physics, much like Sora. However, achieving this level of simulation while maintaining Midjourney's signature artistic style is a complex balancing act.
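One crude way to quantify the "flicker" described above is the mean absolute pixel change between consecutive frames. This is a hypothetical, simplified proxy (the name `mean_frame_difference` is illustrative). It treats intended motion the same as flicker, so more careful metrics compensate for motion first, for example by warping frames with optical flow before comparing them.

```python
from typing import List

Frame = List[List[float]]  # one grayscale frame: rows of pixel values


def mean_frame_difference(frames: List[Frame]) -> float:
    """Average absolute pixel change between consecutive frames.

    A crude flicker proxy: 0.0 means a perfectly static clip. This sketch
    does not separate intended motion from unwanted flicker.
    """
    if len(frames) < 2:
        return 0.0
    total, count = 0.0, 0
    for prev, cur in zip(frames, frames[1:]):
        for row_p, row_c in zip(prev, cur):
            for a, b in zip(row_p, row_c):
                total += abs(a - b)
                count += 1
    return total / count


if __name__ == "__main__":
    flicker = [[[0.0, 0.0]], [[1.0, 1.0]], [[0.0, 0.0]]]  # black/white strobe
    ramp = [[[0.0]], [[0.1]], [[0.2]]]                    # slow, steady fade
    print(mean_frame_difference(flicker), mean_frame_difference(ramp))
```

The strobing clip scores 1.0 while the steady fade scores about 0.1, matching the intuition that the first flickers and the second changes smoothly.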

The image below illustrates the concept of temporal consistency.

Illustration: a long, smooth, continuous line representing a camera movement across a landscape (Sora), above a similar line broken by small, jagged glitches that represent a lack of temporal consistency (Midjourney Video concept).

Ethical and Commercial Implications: Training Data, Copyright, and Deepfakes

The power of these generative models brings with it significant ethical and commercial responsibilities. The debate around training data, copyright, and the potential for misuse is a crucial aspect of the Sora vs Midjourney Video comparison.

Training Data and Copyright

The source of the data used to train these massive models is a central point of contention, particularly for professional artists and content creators.

- Sora's Training Data: OpenAI has been deliberately vague about the exact composition of Sora's training data, stating only that it was trained on "publicly available data and licensed data." This ambiguity has led to concerns from the creative community regarding the inclusion of copyrighted material. However, OpenAI's association with Microsoft and its deep pockets suggest a potential for large-scale licensing agreements in the future.

- Midjourney's Training Data: Midjourney has also faced scrutiny over its training data, which is widely believed to include a vast amount of scraped internet imagery. While the company has taken steps to allow artists to opt out of future training sets, the legal status of past training remains a complex, unresolved issue. Midjourney's community-driven nature, however, has fostered a culture where the ethical use of AI is a constant, public discussion.

The Deepfake and Misinformation Challenge

The hyper-realistic output of models like Sora presents an unprecedented challenge for verifying the authenticity of video content.

- Sora's Threat Level: Sora's ability to generate minute-long, photorealistic, and temporally consistent videos makes it the most potent tool for creating convincing deepfakes and spreading misinformation. OpenAI has acknowledged this risk and has stated they are working on detection classifiers and watermarking techniques to identify Sora-generated content.

- Midjourney Video's Mitigating Factor: If Midjourney Video leans into its expected artistic, stylized aesthetic, its output may be less suitable for creating convincing deepfakes. The inherent "Midjourney look" could act as a natural, stylistic watermark, making it easier for viewers to identify the content as AI-generated art rather than fake reality.
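Alongside pixel-level watermarking, signed provenance metadata is a common way to label generated content. The sketch below is a toy illustration of that idea, not C2PA and not any real watermarking scheme. The generator attaches a record holding a hash of the video bytes plus an HMAC signature, and a verifier holding the same key can detect edited bytes or forged records. The key and helper names are hypothetical. Real provenance standards use public-key signatures so that anyone can verify, and metadata like this can simply be stripped, which is why it is usually paired with watermarks embedded in the pixels.

```python
import hashlib
import hmac
import json

# Assumption: a secret held only by the generating service (hypothetical value).
SECRET_KEY = b"hypothetical-provider-signing-key"


def sign_manifest(video_bytes: bytes, generator: str) -> dict:
    """Attach a signed provenance record to generated video bytes."""
    record = {"generator": generator,
              "sha256": hashlib.sha256(video_bytes).hexdigest()}
    # Sign a canonical JSON encoding so key order cannot change the result.
    payload = json.dumps(record, sort_keys=True).encode()
    record["signature"] = hmac.new(SECRET_KEY, payload, hashlib.sha256).hexdigest()
    return record


def verify_manifest(video_bytes: bytes, record: dict) -> bool:
    """Check that the bytes match the record and the record was not forged."""
    unsigned = {k: v for k, v in record.items() if k != "signature"}
    if unsigned.get("sha256") != hashlib.sha256(video_bytes).hexdigest():
        return False  # the video itself was altered
    payload = json.dumps(unsigned, sort_keys=True).encode()
    expected = hmac.new(SECRET_KEY, payload, hashlib.sha256).hexdigest()
    # Constant-time comparison avoids leaking how many characters matched.
    return hmac.compare_digest(expected, record.get("signature", ""))
```

For example, a record created with `sign_manifest(clip, "example-model")` verifies against the original `clip` but fails if the bytes are edited or if someone rewrites the `generator` field to claim the footage came from a camera.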

Commercialization and Monetization

The business models reflect the different philosophies of the parent companies.

- OpenAI's Enterprise Focus: Sora is clearly aimed at the high-end, enterprise, and professional market. Its likely API-based monetization will focus on high-volume, high-value use cases in film, advertising, and game development. The cost is likely to be high, reflecting the immense computational resources required.

- Midjourney's Creator Focus: Midjourney's model is built around the individual creator, offering tiered subscription plans that are highly accessible. Its monetization focuses on the volume of users and the community's willingness to pay for a superior artistic tool. This model is more democratizing, putting powerful tools into the hands of millions.

Conclusion: The Future of Cinematic AI

The rivalry between Sora and Midjourney Video is the most exciting development in the creative technology space. It is a battle between two distinct visions for the future of filmmaking.

For the professional filmmaker or VFX artist who requires unprecedented realism, long-form coherence, and technical precision, Sora is the current and likely future standard. Its ability to simulate the real world with such fidelity makes it an indispensable tool for pre-visualization and complex scene generation.

For the artist or content creator who prioritizes unique, stunning aesthetics, artistic control, and a collaborative community, Midjourney Video is the platform to watch. Its eventual release is expected to democratize high-end artistic video generation, just as its image model did for still art.

The ultimate outcome is not a single winner, but a clear segmentation of the market. Sora will define the standard for realism and technical simulation, while Midjourney Video will define the standard for artistic vision and community-driven creation. The competition between these two models will only accelerate the pace of innovation, pushing the boundaries of what is possible in cinematic AI.