Sora vs Runway: Which AI Video Generator Reigns Supreme in 2025?

The landscape of generative AI video is evolving at a breakneck pace, transforming from a niche technology into a fundamental tool for creators, marketers, and filmmakers. At the forefront of this revolution stand two titans: OpenAI's Sora and Runway. While Sora burst onto the scene with jaw-dropping, photorealistic clips, Runway has been the incumbent, a pioneer that has consistently iterated with powerful tools like Gen-4. The question on every creator's mind is no longer if AI video is ready, but which platform offers the superior experience and results. This comprehensive analysis dives deep into the capabilities, costs, and creative potential of both platforms to determine, in 2025, which AI video generator truly reigns supreme.

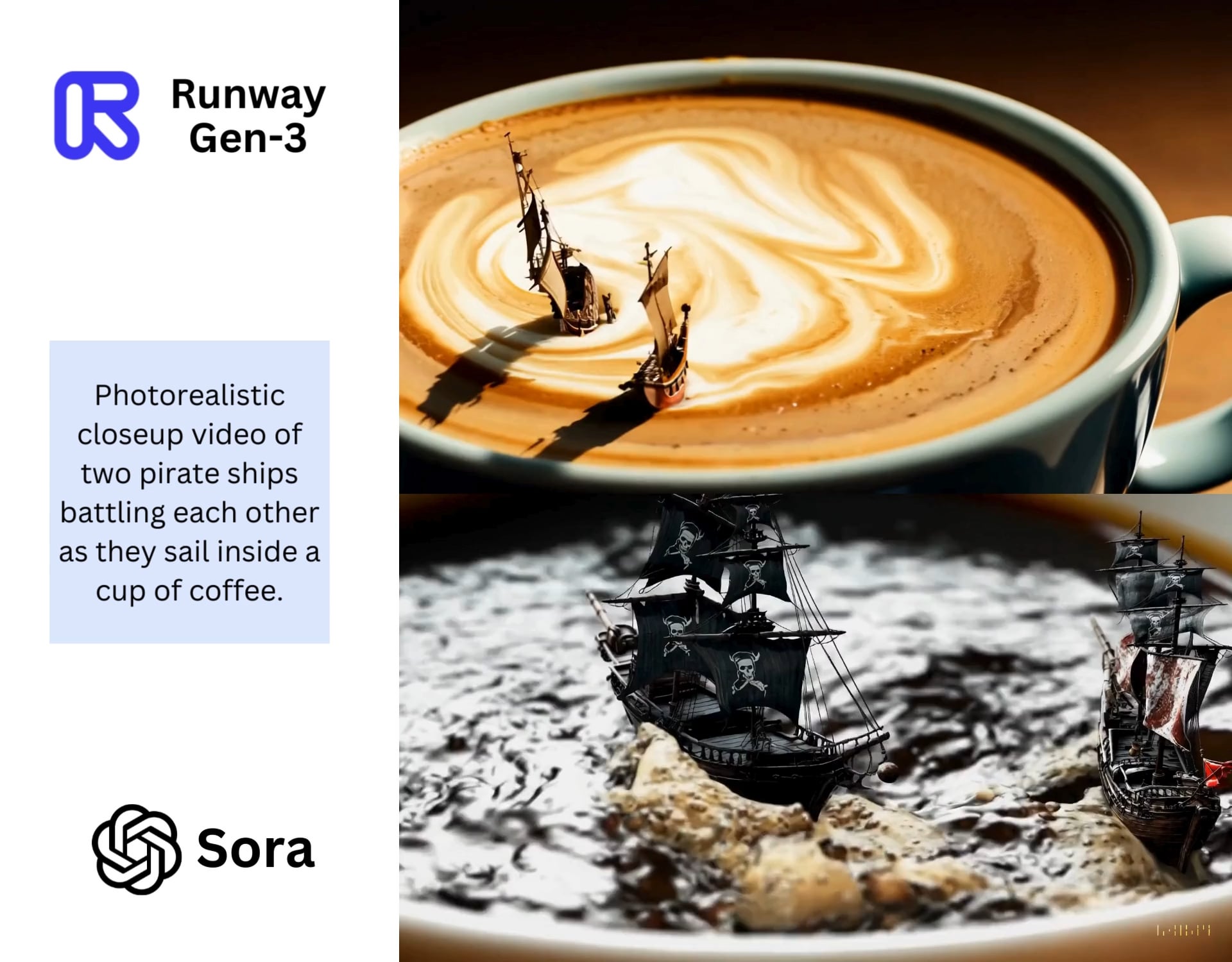

At its core, the Sora vs Runway debate is a contest between two distinct philosophies. Sora, developed by the research-focused OpenAI, emphasizes raw photorealism and imaginative scene generation. It often operates as a black box that delivers stunning results from minimal user input. Runway, on the other hand, has built a comprehensive creative suite. It offers a full spectrum of control and editing tools that fit into existing filmmaking workflows. Understanding this fundamental difference is the first step in choosing the right tool for your project.

Sora vs Runway Features: A Deep Dive into Creative Control

When comparing the feature sets of these two platforms, it becomes clear that they are designed for slightly different user bases. Sora's primary feature is its unparalleled text-to-video generation capability. It excels at creating complex, high-fidelity scenes with multiple characters, specific types of motion, and accurate rendering of objects and environments. Its ability to maintain object permanence and approximate real-world physics over longer clips is a technological marvel. It is not flawless, though: OpenAI itself acknowledges that the model still gets some basic physical interactions wrong.

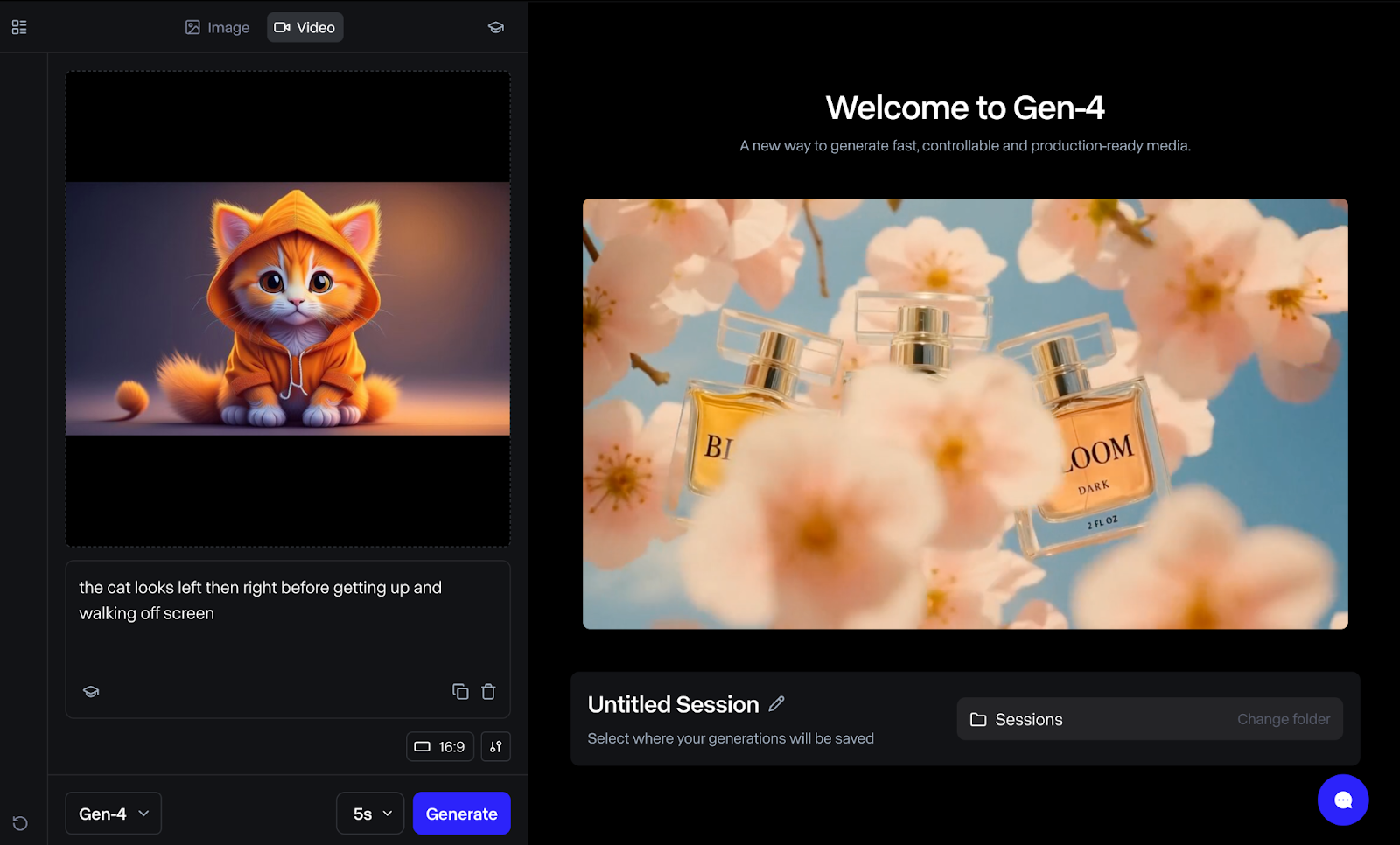

Runway, with its Gen-4 model, offers a more diverse and integrated suite of tools. While its text-to-video is highly competitive, its strength lies in its multi-modal capabilities and its commitment to the creative workflow.

Runway’s suite includes tools like Motion Brush, which allows users to paint over specific areas of a video and direct their movement, and Director Mode, which enables greater control over camera movement and composition. These features are critical for filmmakers who need to maintain consistency and artistic vision across a project. Furthermore, Runway's platform is a true creative hub, offering green screen removal, inpainting, and frame-by-frame editing, making it a one-stop shop for AI-assisted post-production.

Sora, by contrast, is currently a more focused tool. Its power is concentrated in the initial generation phase. The quality of the output is so high that it often requires less post-production, but the user has less granular control over the final result. For a quick, stunning, and highly realistic shot, Sora is unmatched. For a complex scene that requires precise adjustments and integration with other footage, Runway’s comprehensive toolset provides a significant advantage.

Sora vs Runway Quality: The Cinematic Benchmark

The most talked-about aspect of the Sora vs Runway debate is the sheer quality of the generated video. When Sora’s initial examples were released, they set a new, almost unbelievable standard for photorealism.

The Photorealism of Sora

Sora’s videos are characterized by an almost uncanny level of detail, lighting, and texture. The model renders complex interactions like reflections, shadows, and fluid dynamics with remarkable accuracy, suggesting a strong implicit grasp of how the physical world behaves. What truly distinguishes it is its ability to generate long, coherent scenes with consistent characters and environments. This is not just a technological leap but a creative one, opening narrative possibilities that were previously out of reach for generative AI. Many of the initial clips were so convincing that they were hard to distinguish from real-world footage, prompting deep discussions about the future of visual media [1].

Runway’s Iterative Excellence

Runway has not stood still. With the release of Gen-4 and its faster Gen-4 Turbo variant, Runway has significantly closed the gap. Its strength lies in its versatility and its ability to handle a wide range of artistic styles, from hyper-realistic to highly stylized and abstract. While Sora might win on out-of-the-box photorealism, Runway often wins on prompt adherence and stylistic flexibility.

For a filmmaker, a video that matches a specific aesthetic, such as a grainy 1970s film look or a vibrant, cel-shaded animation, is often more valuable than raw photorealism. Runway’s models support a broad creative palette. The company’s rapid release cadence also means they improve frequently in response to real-world user feedback, a crucial factor for a production-ready tool.

Sora Cinematic Example: A high-fidelity example of Sora's photorealistic output, showcasing its advanced understanding of lighting and texture.

Sora vs Runway Pricing: Cost-Effectiveness for Creators

The financial model is a critical factor for any professional creator or studio. The comparison of Sora vs Runway pricing is complex because the two companies have fundamentally different approaches to monetization and access.

Runway’s Transparent, Credit-Based System

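Runway operates on a clear, credit-based subscription model. Each plan includes a credit allowance, and each generation draws down credits according to the model used and the length of the clip. This makes the system transparent and scalable, and it makes projects easy to budget.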

The key advantage of Runway’s pricing is the Unlimited Plan, which supports a predictable, high-volume workflow for professional studios. Even on this plan, Gen-4 generations still draw on credits, but the overall cost efficiency for a high-volume user is excellent. The free tier is also a major draw, allowing anyone to experiment with the technology before committing financially. You can explore the full details of the current plans on the official Runway pricing page [2].
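Because every generation draws down a credit balance, budgeting a Runway project comes down to simple arithmetic. The sketch below is a minimal illustration, not Runway's actual pricing. The per-second credit rate and the monthly allowance are HYPOTHETICAL placeholders, and the helper names are invented for this example. The takes-per-shot factor is there because a usable shot often takes several generations.

```python
import math

# HYPOTHETICAL rates for illustration only; check Runway's pricing page for real figures.
CREDITS_PER_SECOND = 10   # assumed credit cost per second of generated video
CREDITS_PER_MONTH = 1000  # assumed monthly credit allowance of a paid plan


def credits_for_project(final_shots: int, seconds_per_shot: int,
                        takes_per_shot: int,
                        credits_per_second: int = CREDITS_PER_SECOND) -> int:
    """Credits needed if each final shot takes several generations to get right."""
    return final_shots * seconds_per_shot * takes_per_shot * credits_per_second


def months_of_allowance(total_credits: int,
                        credits_per_month: int = CREDITS_PER_MONTH) -> int:
    """Billing cycles needed to cover the project, rounding partial months up."""
    return math.ceil(total_credits / credits_per_month)


# A 12-shot spot with 5-second shots and roughly 4 takes per usable shot:
needed = credits_for_project(final_shots=12, seconds_per_shot=5, takes_per_shot=4)
print(needed)                       # 2400
print(months_of_allowance(needed))  # 3
```

Swap in the current figures from the pricing page before relying on the result. The structure of the calculation is the point: the number of takes per shot often moves the total more than the per-second rate does.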

Sora’s Access Model: A Research-First Approach

Sora’s pricing model is less defined and more tied to the broader OpenAI ecosystem. At the time of this writing, Sora is not sold as a standalone product. Access comes mainly as a feature bundled with paid OpenAI subscriptions such as ChatGPT Plus and Pro, with availability and usage limits that vary by plan and region.

• Access: Mainly through a paid OpenAI subscription. Availability has been limited by region and, at times, by invitation.

• Cost: Indirectly tied to the monthly cost of an OpenAI subscription (e.g., $20/month for ChatGPT Plus, which may include limited Sora access).

• Monetization: OpenAI's long-term strategy may center on a premium, API-based model. In that model, the cost per second of video could be significantly higher than current market rates, reflecting the model's quality and computational cost.

For the average creator, Runway offers a more accessible and financially predictable path. For a large studio or a company with an existing OpenAI Enterprise relationship, the integration and raw quality of Sora may justify the higher, less transparent cost. The long-term cost-effectiveness of Sora vs Runway will ultimately depend on how quickly Sora moves from a research tool to a widely available commercial product.

Sora vs Runway for Filmmakers: Control, Workflow, and Integration

For professional filmmakers, the choice between these two tools is not just about the final image quality. It is about how the tool fits into a demanding, high-stakes production pipeline. The filmmaker's view of Sora vs Runway is perhaps the most crucial comparison, as it addresses the practical realities of production.

Runway: The Filmmaker’s Toolkit

Runway was built with filmmakers and visual artists in mind, and this is evident in its design philosophy.

1. Integrated Workflow: Runway is a cloud-based video editor and creative suite. Filmmakers can upload existing footage, use the AI tools (like Gen-4, inpainting, and motion tracking) to enhance or alter it, and then export the finished clips. This seamless integration means less time spent moving files between different software packages.

2. Control and Iteration: The granular control tools (Motion Brush, Director Mode) allow filmmakers to iterate on a shot until it perfectly matches the storyboard. This level of artistic control is non-negotiable in professional production.

3. Customization: Runway allows for custom model training, enabling a studio to train the AI on their specific assets, characters, or visual style. This is a massive advantage for maintaining brand consistency or a unique cinematic look across a series or film.

Runway Gen-4 Interface: A screenshot of the Runway Gen-4 interface, highlighting its integrated tools and user-friendly design for creative professionals.

Sora: The Visionary Concept Artist

Sora’s role in the filmmaking pipeline is currently more akin to a visionary concept artist or a digital matte painter.

1. Concept and Pre-Visualization: Sora’s ability to generate incredibly detailed, coherent scenes makes it a powerful tool for pre-visualization. A director can quickly generate a short clip to communicate a complex visual idea to a cinematographer or VFX team, saving substantial time in traditional pre-production.

2. Digital Matte Painting and Set Extension: For shots that require massive, impossible environments, Sora can generate the base footage with a level of realism that is hard to achieve with traditional VFX.

3. Limitations in Control: The primary hurdle for Sora in a professional workflow is the lack of granular control. Suppose a generated clip is 95% perfect but needs a slight adjustment to a character's position or a camera move. The filmmaker often has to fall back on a revised prompt, which may produce a completely different, albeit equally stunning, clip. This lack of deterministic control can be a major bottleneck on a tight production schedule.

For a filmmaker, the ideal scenario is likely a hybrid approach: using Sora for initial concept generation and highly realistic, complex shots, and then using Runway’s comprehensive suite for the bulk of the production, editing, and fine-tuning.

The Technological Underpinnings: Diffusion vs. World Models

To truly appreciate the difference between these two giants, one must look at the underlying technology. Sora is a diffusion model (OpenAI describes it as a diffusion transformer). Runway’s video models also have their roots in diffusion, going back to Gen-1. A diffusion model starts from random noise and gradually refines it, over many small steps, into a coherent image or video. However, the scale and specific implementation likely differ significantly.
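The noise-to-video refinement described above can be made concrete with a toy example. This is a minimal sketch of the textbook DDPM sampling loop, not Sora's or Runway's sampler, since neither company has published one. The linear noise schedule, the step count, and the three-value "frame" are illustrative assumptions. The `oracle_noise` function is a hypothetical stand-in that cheats by knowing the target. A real system replaces it with a large neural network conditioned on the prompt.

```python
import math
import random


def linear_schedule(num_steps: int, beta_start: float = 1e-4, beta_end: float = 0.02):
    """Return per-step noise levels (betas) and their cumulative products (alpha_bars)."""
    betas = [beta_start + (beta_end - beta_start) * i / (num_steps - 1)
             for i in range(num_steps)]
    alpha_bars, running = [], 1.0
    for beta in betas:
        running *= 1.0 - beta
        alpha_bars.append(running)
    return betas, alpha_bars


def oracle_noise(x_t, t, alpha_bars, target):
    """HYPOTHETICAL stand-in for a trained denoising network.

    It "cheats" by computing the exact noise separating x_t from the known target;
    a real model has to predict this noise from x_t (and the prompt) alone.
    """
    ab = alpha_bars[t]
    return [(x - math.sqrt(ab) * y) / math.sqrt(1.0 - ab) for x, y in zip(x_t, target)]


def generate(target, num_steps=50, seed=0):
    """Run a DDPM-style reverse process from pure noise toward `target`."""
    rng = random.Random(seed)
    betas, alpha_bars = linear_schedule(num_steps)
    x = [rng.gauss(0.0, 1.0) for _ in target]  # start from pure Gaussian noise
    for t in reversed(range(num_steps)):
        eps = oracle_noise(x, t, alpha_bars, target)
        alpha = 1.0 - betas[t]
        coef = betas[t] / math.sqrt(1.0 - alpha_bars[t])
        # Remove the noise predicted for this step (the DDPM posterior mean).
        x = [(xi - coef * ei) / math.sqrt(alpha) for xi, ei in zip(x, eps)]
        if t > 0:
            # Add back a small amount of fresh noise on every step except the last.
            sigma = math.sqrt(betas[t])
            x = [xi + sigma * rng.gauss(0.0, 1.0) for xi in x]
    return x


toy_frame = [0.5, -1.0, 2.0]  # HYPOTHETICAL 3-"pixel" frame
print([round(v, 6) for v in generate(toy_frame)])  # → [0.5, -1.0, 2.0]
```

Each pass strips out the noise predicted for that step. Except on the last pass, it then adds back a little fresh noise. With a perfect noise predictor the loop lands exactly on the target, so all of the difficulty in a real video model lies in learning that predictor.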

Runway has published fewer technical details about Gen-4, but the highly advanced model appears to build on a more conventional generative framework that has been continuously optimized. It excels at generating short, high-quality clips and providing the tools to manipulate them.

Sora, on the other hand, is framed around the concept of a "World Model." OpenAI has stated that it is teaching Sora not just to generate pixels, but to understand and simulate the physical world in motion. According to OpenAI's technical report, Sora first compresses video into a lower-dimensional latent representation. It then splits that representation into "spacetime patches," small, uniform units that play the role tokens play in a language model. This lets a single model train on and generate a wide variety of resolutions, durations, and aspect ratios. Combined with its vast training data, this approach appears to let Sora approximate the underlying physics of a scene, producing the object permanence and temporal consistency that stunned the industry. This is a fundamental difference: Runway is a master of video generation, while Sora is attempting to master the simulation of reality itself.
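The patch idea is easy to sketch. The function below cuts a video array shaped (frames, height, width, channels) into non-overlapping spacetime patches and flattens each one into a vector. Clips of different shapes therefore become token sequences of different lengths, but every token has the same size. This is an illustration under stated assumptions, not OpenAI's code. The patch size, the 4-channel "latent" inputs, and the function name are hypothetical, and Sora applies patching to a compressed latent representation rather than to raw pixels.

```python
import numpy as np


def to_spacetime_patches(video: np.ndarray, pt: int, ph: int, pw: int) -> np.ndarray:
    """Split a (T, H, W, C) array into flattened, non-overlapping spacetime patches.

    Returns shape (num_patches, pt * ph * pw * C): one row ("token") per patch,
    ordered by time, then row, then column of the patch grid.
    """
    t, h, w, c = video.shape
    if t % pt or h % ph or w % pw:
        raise ValueError("each dimension must be divisible by its patch size")
    # Split every axis into (patch-grid index, offset inside the patch).
    x = video.reshape(t // pt, pt, h // ph, ph, w // pw, pw, c)
    # Move the three grid axes to the front and the in-patch axes after them.
    x = x.transpose(0, 2, 4, 1, 3, 5, 6)
    # Flatten the grid into a sequence and each patch into a vector.
    return x.reshape(-1, pt * ph * pw * c)


# HYPOTHETICAL sizes: 4-channel latent clips cut into 2x16x16 patches.
rng = np.random.default_rng(0)
landscape = rng.standard_normal((16, 64, 112, 4))  # 16 frames, wide
portrait = rng.standard_normal((8, 112, 64, 4))    # 8 frames, tall

wide_tokens = to_spacetime_patches(landscape, 2, 16, 16)
tall_tokens = to_spacetime_patches(portrait, 2, 16, 16)
print(wide_tokens.shape)  # (224, 2048)
print(tall_tokens.shape)  # (112, 2048)
```

The transformer only ever sees a sequence of equally sized tokens. A wide clip and a tall clip can therefore share one model, and only the sequence length changes.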

The Future of AI Video: Sora’s Potential vs. Runway’s Practicality

The ongoing evolution of Sora vs Runway is a microcosm of the broader AI industry: the race between groundbreaking research and practical, production-ready tools.

Sora’s Trajectory: The Unstoppable Force

Sora’s potential is enormous. If OpenAI can successfully commercialize the model and add more control features, it could become the default tool for high-end, photorealistic video generation. The ability, shown in OpenAI's research preview, to generate a minute-long cinematic scene from a single prompt is a game-changer for everything from advertising to feature film pre-production. The next steps for Sora will likely involve:

• API Access: Opening up the model to developers and studios via a robust API.

• Control Mechanisms: Introducing features similar to Runway’s Motion Brush or Director Mode to give users more deterministic control over the output.

• Longer Clips: Extending the maximum duration of generated video to facilitate full scene creation.

Runway’s Trajectory: The Creative Ecosystem

Runway’s strategy is to solidify its position as the creative ecosystem for AI-assisted filmmaking. They are not just building a model; they are building a platform. Their future development will likely focus on:

• Real-Time Generation: Reducing the generation time to near-instantaneous, making the tool feel more like a traditional editor.

• Deeper Editing Tools: Integrating more advanced, AI-powered editing features that go beyond simple generation, such as automated color grading, sound design, and complex VFX integration.

• Community and Collaboration: Building out collaborative features that allow teams to work on projects together within the Runway environment.

Sora vs Runway Comparison: A visual comparison of the output styles and interfaces of Sora and Runway, illustrating the difference between the two approaches.

Conclusion: Which AI Video Generator Reigns Supreme?

So which AI video generator reigns supreme in 2025? The answer is nuanced and depends entirely on the user's needs.

For the creator whose primary need is unparalleled photorealism, long-form coherence, and cutting-edge visual fidelity, Sora is the reigning champion. Its "World Model" approach has set a new benchmark for what is possible in generative AI. It is the tool for generating the most stunning, high-impact, and complex shots that require minimal post-production.

For the professional filmmaker, agency, or high-volume content creator who requires a full suite of integrated tools, predictable pricing, and granular control over the creative process, Runway is the superior platform. Its commitment to the creative workflow, its transparent pricing, and its powerful control features make it the more practical and production-ready choice.

In the short term, Runway is the more accessible and versatile tool for the majority of creators. In the long term, Sora represents the future of the technology, and its eventual widespread release will force every competitor to raise their game. The true winner is the creator, who now has two incredibly powerful tools to choose from, each pushing the boundaries of digital storytelling.

Runway Gen-4 Image-to-Video: An example of Runway's Image-to-Video feature, a key tool for filmmakers looking to animate static assets.

Sora Output Example: A high-resolution example of a complex scene generated by Sora, demonstrating its ability to handle multiple subjects and detailed environments.

The competition between these two platforms is driving innovation at a remarkable pace. As a creator, staying informed about the latest updates from both OpenAI and Runway is essential. For further reading on the technical aspects of these models, OpenAI's official technical report, "Video generation models as world simulators," provides a fascinating look into the "World Model" concept [3]. For those looking to integrate AI video into their marketing strategy, a detailed guide on using generative AI for advertising is available on the Marketing AI Institute website [4]. Finally, to keep up with the latest advancements in the broader AI video landscape, the Generative AI News portal is an excellent resource [5].