Stable Diffusion 3 vs DALL-E 3: The Ultimate AI Image Generator Comparison

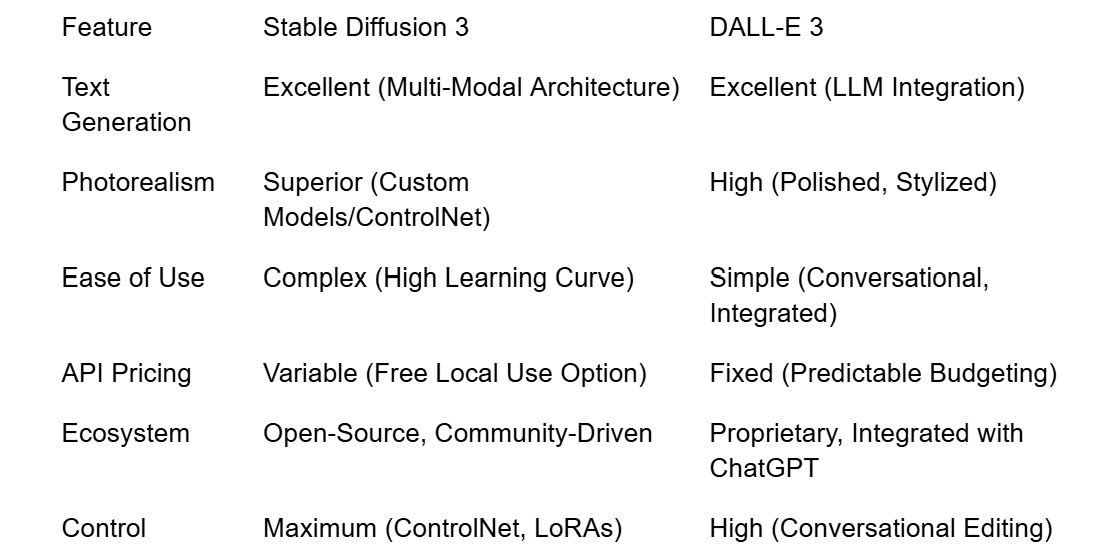

The world of generative AI image creation has been fundamentally reshaped by two titans: Stability AI’s Stable Diffusion 3 (SD3) and OpenAI’s DALL-E 3. These models represent the pinnacle of current technology, each claiming superiority in different domains. For artists, developers, and businesses, the choice between them is a strategic decision that impacts everything from creative control to budget and intellectual property.

The comparison of Stable Diffusion 3 vs DALL-E 3 is also a comparison of two philosophies of AI development: the open, community-driven model versus the proprietary, polished, and integrated ecosystem. This analysis compares their performance across critical metrics, including text generation, photorealism, API pricing, and the long-term implications of their respective development models, as a guide for 2025.

The Core Battleground: Text Generation Accuracy

Historically, a major weakness of AI image generators was their inability to render accurate, legible text within an image. Both SD3 and DALL-E 3 have made this a central focus of their development, which makes text generation accuracy the most direct point of competition between them.

DALL-E 3’s Integrated Advantage

DALL-E 3’s strength in text generation stems from its deep integration with the ChatGPT Large Language Model (LLM).

•Prompt Refinement: The LLM acts as an intermediary, automatically refining the user’s simple prompt into a highly detailed, structured prompt for the image model. This ensures that the model receives clear, unambiguous instructions for the text it needs to render.

•Contextual Understanding: DALL-E 3 excels at placing text contextually within a scene (e.g., a sign on a building, a logo on a t-shirt) with high fidelity, making it a powerful tool for mockups and concept art.
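To make the prompt-refinement step concrete, here is a minimal sketch of the request body an application might send to OpenAI's image endpoint, plus a helper that reads back the rewritten prompt. It assumes the publicly documented `dall-e-3` request parameters (`size`, `quality`, `n`) and the `revised_prompt` response field. The helper names `build_dalle3_request` and `extract_revised_prompt` are hypothetical, and no network call is made.

```python
import json
from typing import Optional

# Endpoint for OpenAI image generation (shown for reference; not called here).
IMAGES_ENDPOINT = "https://api.openai.com/v1/images/generations"

ALLOWED_SIZES = {"1024x1024", "1792x1024", "1024x1792"}
ALLOWED_QUALITIES = {"standard", "hd"}


def build_dalle3_request(prompt: str, size: str = "1024x1024",
                         quality: str = "standard") -> dict:
    """Build the JSON body for a DALL-E 3 request (hypothetical helper)."""
    if size not in ALLOWED_SIZES:
        raise ValueError(f"unsupported size: {size}")
    if quality not in ALLOWED_QUALITIES:
        raise ValueError(f"unsupported quality: {quality}")
    # DALL-E 3 generates one image per request, so n is fixed at 1.
    return {"model": "dall-e-3", "prompt": prompt, "n": 1,
            "size": size, "quality": quality}


def extract_revised_prompt(response_body: dict) -> Optional[str]:
    """Return the LLM-rewritten prompt if the response carries one."""
    data = response_body.get("data") or []
    if not data:
        return None
    return data[0].get("revised_prompt")


if __name__ == "__main__":
    body = build_dalle3_request('A bakery storefront with a sign that reads "FRESH BREAD"')
    print(json.dumps(body, indent=2))
```

Comparing the original prompt with the returned `revised_prompt` is a simple way to see how much detail the LLM added before the image model ran.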

Stable Diffusion 3’s Technical Leap

Stable Diffusion 3 achieved its breakthrough in text generation through a new architecture, the Multimodal Diffusion Transformer (MMDiT), paired with several text encoders.

•Multi-Modal Architecture: SD3 processes text and image tokens jointly and combines three different text encoders (two CLIP models and T5) to better understand the semantic meaning of the prompt. This allows it to handle complex, multi-clause prompts and long strings of text more accurately than earlier Stable Diffusion versions.

•Open-Source Iteration: While DALL-E 3 is closed, the open-source nature of SD3 means that the community can rapidly develop extensions and fine-tuned models (LoRAs) specifically to enhance text rendering, leading to a faster pace of improvement in this area.
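How three text encoders end up as one conditioning signal is easier to see at the level of tensor shapes. The sketch below assumes the layout described in the SD3 research paper: the two CLIP outputs (widths 768 and 1280) are joined along the channel axis, zero-padded to T5's width of 4096, and then stacked with the T5 output along the sequence axis. The embeddings here are zero-filled placeholders, and all function names are illustrative.

```python
def zeros(rows, cols):
    """Placeholder embedding matrix: rows = tokens, cols = channels."""
    return [[0.0] * cols for _ in range(rows)]


def concat_channels(a, b):
    """Join two embeddings token by token (same token count required)."""
    if len(a) != len(b):
        raise ValueError("token counts differ")
    return [row_a + row_b for row_a, row_b in zip(a, b)]


def pad_channels(x, width):
    """Zero-pad every token vector up to the target channel width."""
    if any(len(row) > width for row in x):
        raise ValueError("embedding is wider than the target width")
    return [row + [0.0] * (width - len(row)) for row in x]


def shape(x):
    return (len(x), len(x[0]))


def build_context(clip_l, clip_g, t5):
    """Combine the three encoder outputs into one context sequence."""
    clip = concat_channels(clip_l, clip_g)   # 77 x (768 + 1280)
    clip = pad_channels(clip, len(t5[0]))    # 77 x 4096
    return clip + t5                         # stack along the sequence axis


clip_l = zeros(77, 768)
clip_g = zeros(77, 1280)
t5 = zeros(77, 4096)
context = build_context(clip_l, clip_g, t5)
print(shape(context))  # (154, 4096)
```

The point of the layout is that the T5 tokens, which carry most of the fine-grained language understanding, sit alongside the CLIP tokens in one sequence the image model can attend to.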

Verdict on Text Generation: While DALL-E 3 was the initial leader, Stable Diffusion 3 has effectively closed the gap and, in some complex scenarios, offers superior performance due to its multi-modal architecture and the rapid iteration of the open-source community.

Image 1: Stable Diffusion 3 vs DALL-E 3 Text Comparison. A side-by-side comparison of images generated by SD3 and DALL-E 3 using a prompt that requires complex, legible text rendering, highlighting the strengths of each model.

The Pursuit of Realism: Photorealism and Artistic Style

Photorealism is a key differentiator for professional photographers, advertisers, and artists seeking to create images indistinguishable from reality.

Stable Diffusion 3: The Photorealism Champion

Stable Diffusion, particularly with its vast ecosystem of fine-tuned models, has long been the gold standard for photorealism. SD3 continues this tradition.

•Custom Models (Checkpoints): The open-source community has created thousands of highly specialized models (often called "checkpoints") trained exclusively on photorealistic data. This allows users to achieve hyper-realistic results that mimic specific camera lenses, film stocks, and lighting conditions.

•Granular Control: SD3's generation settings (sampler, step count, CFG scale) and add-ons such as ControlNet give the user fine-grained control over the final image, allowing for the fine-tuning necessary to achieve true photorealism.
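Of the settings listed above, the CFG scale has the simplest arithmetic core. The sketch below shows the standard classifier-free guidance combination, assuming the model has already produced one prediction with the prompt and one without it. `apply_cfg` is an illustrative name, not a function from any particular library, and the short lists stand in for full prediction tensors.

```python
def apply_cfg(uncond, cond, cfg_scale):
    """Classifier-free guidance: push the prediction away from the
    unconditional result and toward the prompt-conditioned one."""
    if len(uncond) != len(cond):
        raise ValueError("predictions must have the same length")
    return [u + cfg_scale * (c - u) for u, c in zip(uncond, cond)]


uncond = [0.0, 1.0]   # prediction without the prompt
cond = [1.0, 1.0]     # prediction with the prompt

print(apply_cfg(uncond, cond, 1.0))  # [1.0, 1.0]  (plain conditional prediction)
print(apply_cfg(uncond, cond, 7.0))  # [7.0, 1.0]  (prompt direction exaggerated)
```

A scale of 1 reproduces the conditional prediction, while larger values follow the prompt more strictly at the cost of variety, which is why photorealism workflows tend to tune this value carefully.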

DALL-E 3: The Polished, Artistic Look

DALL-E 3, while capable of photorealism, often defaults to a highly polished, slightly stylized, and "AI-perfect" aesthetic.

•Consistent Style: DALL-E 3 is excellent at maintaining a consistent artistic style across multiple generations, which is valuable for branding and concept art. However, this consistency can sometimes make the images feel less "raw" or "authentic" than those produced by a fine-tuned SD3 model.

•Ease of Use: Achieving photorealism in DALL-E 3 is simpler, often requiring just the word "photorealistic" in the prompt, but the user has less control over the subtle details that define true realism, such as depth of field or film grain.

Verdict on Photorealism: For out-of-the-box simplicity, DALL-E 3 is effective. However, for the highest level of detail, control, and the ability to mimic specific photographic styles, Stable Diffusion 3 remains the superior tool due to its open ecosystem and advanced control features.

Image 2: Photorealism Comparison. A side-by-side comparison of photorealistic human faces generated by various models, including SD3 and DALL-E 3, to highlight the subtle differences in skin texture, lighting, and detail.

The Business Decision: API Pricing and Commercial Use

For developers and businesses, API pricing and commercial-use terms are critical factors in determining the long-term cost and scalability of their AI integration.

DALL-E 3: Predictable, All-Inclusive Cost

OpenAI's API pricing for DALL-E 3 is straightforward and based on the image size and quality.

•Predictable Cost: The cost per image is fixed for a given size and quality (e.g., $0.04 for a standard-quality 1024×1024 image at the time of writing), making it easy for businesses to budget their API usage.

•Commercial Rights: OpenAI's terms are clear: users own the images they create and can use them commercially, provided they adhere to the content policy. This clarity is a major advantage for legal and compliance teams.

•Integration: DALL-E 3 is tightly integrated with the broader OpenAI ecosystem (ChatGPT, GPT-4), which simplifies the development of applications that require both text and image generation.
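Because the per-image price is fixed, budgeting reduces to a multiplication. Here is a small sketch that assumes the $0.04 example price quoted above; real prices depend on size and quality and may change. `api_cost` is a hypothetical helper, and `Decimal` is used so that currency arithmetic stays exact.

```python
from decimal import Decimal


def api_cost(images: int, price_per_image: str = "0.04") -> Decimal:
    """Total API spend for a number of images at a fixed per-image price."""
    if images < 0:
        raise ValueError("images must be non-negative")
    return Decimal(price_per_image) * images


print(api_cost(25000))  # 1000.00
```

At that example price, a product generating 25,000 images a month would budget 1,000 dollars for image generation.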

Stable Diffusion 3: Variable Cost and Licensing Complexity

Stable Diffusion 3's pricing is more complex, offering a trade-off between free use and paid API access.

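•Free Local Use: The SD3 weights can be downloaded and run locally, so businesses with the necessary hardware can generate images without per-image fees, a massive cost advantage for high-volume internal use. Stability AI's license attaches conditions to commercial use, so check the current terms before deploying.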

•API Pricing: Stability AI offers a competitive API, but the cost can vary depending on the model size and the number of steps used.

•Licensing Nuance: The SD3 weights are released under Stability AI's own license rather than a standard open-source license, and the licensing for the vast ecosystem of community-created models (LoRAs, checkpoints) can be ambiguous or restrictive, posing a legal risk for commercial use.

Verdict on Commercial Use: For simple, legally safe, and predictable API integration, DALL-E 3 is the safer choice. For businesses with in-house AI expertise and high-volume needs, the cost savings and control offered by running Stable Diffusion 3 locally or on a dedicated cloud instance are unparalleled.
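One way to frame the trade-off in this verdict is a break-even count: how many images must be generated before local hardware pays for itself. The sketch below is a rough model only. The figures in the usage example (a 2,000 dollar GPU and half a cent of running cost per image) are placeholder assumptions, not measurements, and `break_even_images` is a hypothetical helper.

```python
from decimal import Decimal, ROUND_CEILING
from typing import Optional


def break_even_images(hardware_cost: str, local_cost_per_image: str,
                      api_price_per_image: str) -> Optional[int]:
    """Images needed before local generation becomes cheaper than the API.

    Returns None when local generation never catches up, i.e. when the
    local per-image cost is not below the API price.
    """
    saving = Decimal(api_price_per_image) - Decimal(local_cost_per_image)
    if saving <= 0:
        return None
    images = Decimal(hardware_cost) / saving
    return int(images.to_integral_value(rounding=ROUND_CEILING))


# Assumed figures for illustration only.
print(break_even_images("2000", "0.005", "0.04"))  # 57143
```

Under those assumptions the hardware pays for itself after roughly 57,000 images, which a high-volume team could reach quickly and a casual user might never reach. The model ignores staff time, maintenance, and licensing fees.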

The Philosophical Divide: Open-Source vs. Proprietary

The open-source versus proprietary debate is the most fundamental difference between the two platforms, impacting everything from innovation speed to ethical considerations.

Stable Diffusion 3: The Open Ecosystem

Stable Diffusion 3 is the flagship of the open-source movement in generative AI.

•Rapid Innovation: The open-source nature allows thousands of developers worldwide to inspect, modify, and improve the model. This leads to an explosion of innovation, with new features, models, and tools being released at a pace that a single company cannot match.

•Transparency and Auditability: The model's weights and architecture are public, allowing for greater transparency and ethical auditing. Researchers can study the model for bias and safety issues, which is crucial for the long-term health of the AI community.

•Customization: The ability to fine-tune the model on private data is a massive advantage for businesses and artists who need a highly specialized tool.

DALL-E 3: The Polished, Walled Garden

DALL-E 3 operates within a proprietary, closed ecosystem.

•Safety and Guardrails: OpenAI maintains strict control over the model, allowing them to implement strong safety guardrails and content filters. This ensures a more predictable and "safe" user experience, especially for the general public.

•Integrated Experience: The closed nature allows for the seamless, polished integration with ChatGPT, which is DALL-E 3's greatest strength in terms of user experience.

•Lack of Transparency: The model's inner workings are a black box, which limits external auditing and customization. Users are entirely dependent on OpenAI for updates, features, and ethical standards.

Verdict on Philosophy: The choice here is a matter of principle. Stable Diffusion 3 champions freedom, customization, and community-driven innovation. DALL-E 3 champions simplicity, safety, and a polished, integrated user experience.

Advanced Features and Workflow Integration

For the professional user, the comparison extends beyond the core generation quality to the advanced features that streamline the creative workflow.

Stable Diffusion 3’s ControlNet and LoRAs

Stable Diffusion 3's ecosystem is defined by its extensions.

•ControlNet: This is a game-changing feature that allows users to guide the generation process using structural inputs like depth maps, edge detection, and human poses. This gives the user deterministic control over the composition, a feature DALL-E 3 cannot currently match.

•LoRAs (Low-Rank Adaptation): These small, fine-tuned models allow users to inject specific styles, characters, or objects into the generation process without retraining the entire model. This is the ultimate tool for brand consistency and character design.

DALL-E 3’s Inpainting and Conversational Editing

DALL-E 3’s advanced features are focused on conversational editing.

•Inpainting/Outpainting: DALL-E 3 allows users to select an area of an image and ask the model to modify it or expand the image beyond its original borders, all through natural language prompts.

•Iterative Refinement: The ability to simply say "make the subject look happier" or "change the background to a desert" and have the model understand and execute the command is a massive time-saver for rapid prototyping.

Conclusion: The Ultimate AI Image Generator Comparison

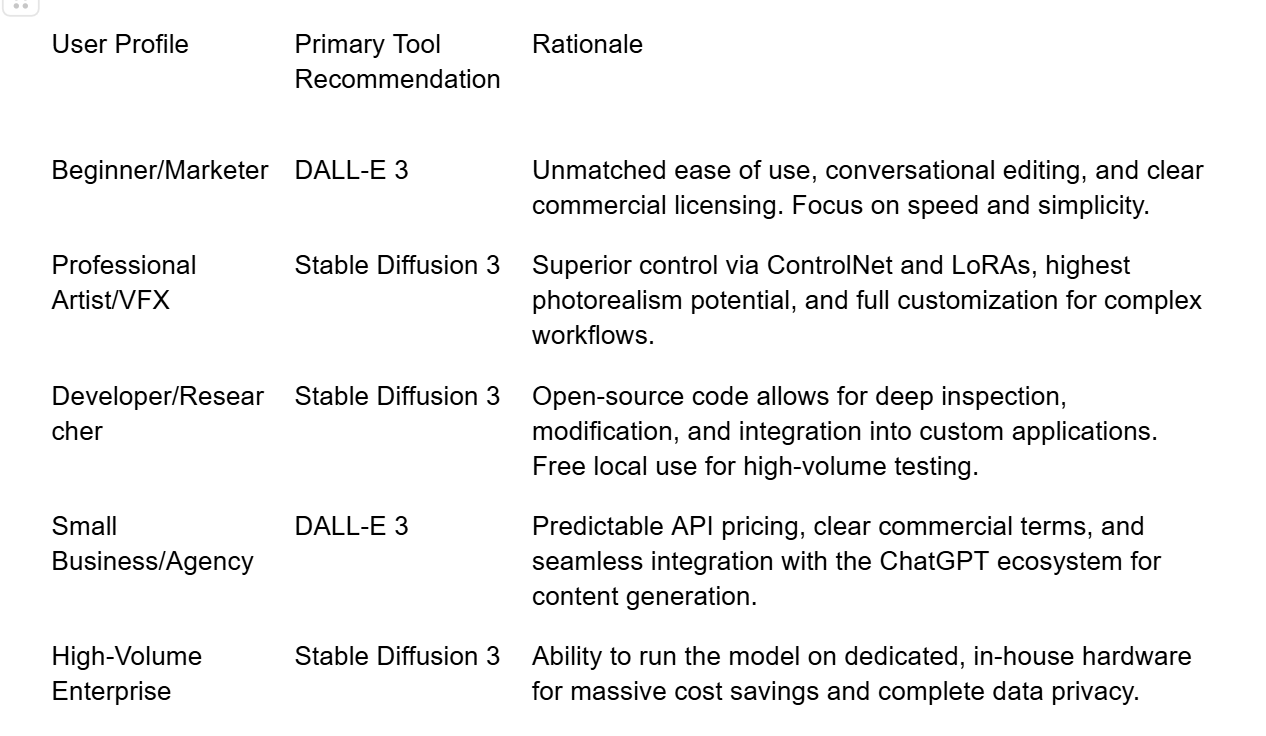

The battle between Stable Diffusion 3 and DALL-E 3 is a microcosm of the entire AI industry. Both models are technological marvels, but they serve different masters.

For the developer, the power user, and the artist who demands ultimate control and customization, Stable Diffusion 3 is the clear winner. Its open-source nature and the vast ecosystem of tools offer a level of creative freedom that is unmatched.

For the general user, the marketer, and the business seeking simplicity, legal clarity, and a polished, integrated experience, DALL-E 3 is the superior choice. Its seamless integration with ChatGPT makes it the most accessible and user-friendly tool on the market.

The ultimate winner is the creator who leverages the strengths of both. Use DALL-E 3 for quick, text-accurate mockups, and then use Stable Diffusion 3 for the final, highly controlled, photorealistic output.

Deep Dive into Advanced Workflow Integration

For professional artists and production studios, the true value of an AI image generator is measured by its ability to integrate seamlessly into a complex workflow. This is where the open-source flexibility of Stable Diffusion 3 and the polished, integrated experience of DALL-E 3 diverge most significantly.

Stable Diffusion 3: The Modular Powerhouse

The strength of Stable Diffusion 3 lies in its modularity, which is facilitated by the open-source community. This modularity allows for a degree of workflow customization that is simply impossible in a closed system.

ControlNet: Deterministic Composition

ControlNet is arguably the most significant innovation in the Stable Diffusion ecosystem. It is a neural network structure that allows users to input an image that dictates the composition, pose, or depth of the final generated image.

•Pose Control: A user can upload a simple stick figure drawing or a photograph of a person in a specific pose, and ControlNet will ensure the generated image's subject adopts that exact pose. This is revolutionary for character artists, illustrators, and comic book creators who need to maintain consistency across a series of images.

•Depth and Edge Maps: By using depth maps or canny edge detection maps derived from existing photographs, artists can generate entirely new images that retain the precise spatial arrangement and lighting of the original scene. This transforms the AI from a random generator into a powerful, deterministic tool for concept art and architectural visualization.
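The edge maps mentioned above are ordinary images in which only outlines survive. The sketch below is a simplified stand-in for Canny edge detection: it applies a Sobel gradient and a single threshold, and it skips the non-maximum suppression and hysteresis steps that real Canny performs. It works on a small grayscale grid in pure Python; in practice a library routine such as OpenCV's `cv2.Canny` would produce the map that is fed to ControlNet. The function name and threshold are illustrative.

```python
def edge_map(gray, threshold=100):
    """Return a binary edge map (1 = edge) from a 2D grid of 0-255 values.

    Border pixels are left at 0 because the 3x3 Sobel window does not fit.
    """
    height, width = len(gray), len(gray[0])
    edges = [[0] * width for _ in range(height)]
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            # Horizontal gradient: right column minus left column.
            gx = (gray[y - 1][x + 1] + 2 * gray[y][x + 1] + gray[y + 1][x + 1]
                  - gray[y - 1][x - 1] - 2 * gray[y][x - 1] - gray[y + 1][x - 1])
            # Vertical gradient: bottom row minus top row.
            gy = (gray[y + 1][x - 1] + 2 * gray[y + 1][x] + gray[y + 1][x + 1]
                  - gray[y - 1][x - 1] - 2 * gray[y - 1][x] - gray[y - 1][x + 1])
            if (gx * gx + gy * gy) ** 0.5 >= threshold:
                edges[y][x] = 1
    return edges


# A dark left half next to a bright right half: one vertical edge.
image = [[0, 0, 0, 255, 255, 255] for _ in range(5)]
for row in edge_map(image):
    print(row)
```

The output marks only the two columns where dark meets bright. That outline is the kind of structural input ControlNet uses to fix composition, while the prompt decides what fills it.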

LoRAs and Checkpoints: Infinite Customization

The ability to use LoRAs (Low-Rank Adaptation) and custom checkpoints is the lifeblood of the Stable Diffusion community. LoRAs are small files that are loaded on top of the base SD3 model to inject specific knowledge or artistic styles, while custom checkpoints are full fine-tuned versions of the model that replace it.

•Brand Consistency: A company can train a LoRA on its own product photography or brand assets, ensuring that every image generated by the AI adheres to its specific style guide, color palette, and product design.

•Niche Styles: Artists can download LoRAs fine-tuned for hyper-specific styles, such as "Ghibli-esque watercolor" or "1980s cyberpunk neon," giving them access to an unparalleled library of artistic voices.
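The reason a LoRA file can be so small is that it stores two thin matrices instead of a full weight update. The sketch below shows the standard low-rank update, W' = W + (alpha / rank) * B * A, on a toy 2x2 weight, along with a parameter count for a larger layer. The matrices and the 4096-wide layer are made-up illustrations, and the function names are hypothetical.

```python
def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    if len(a[0]) != len(b):
        raise ValueError("inner dimensions do not match")
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))]
            for i in range(len(a))]


def apply_lora(weight, lora_b, lora_a, alpha, rank):
    """Merge a LoRA update into a base weight matrix."""
    scale = alpha / rank
    delta = matmul(lora_b, lora_a)  # (d_out x rank) @ (rank x d_in)
    return [[w + scale * d for w, d in zip(w_row, d_row)]
            for w_row, d_row in zip(weight, delta)]


def lora_param_count(d_out, d_in, rank):
    """Numbers stored by the LoRA instead of a full d_out x d_in update."""
    return rank * (d_out + d_in)


base = [[1.0, 0.0], [0.0, 1.0]]
lora_b = [[1.0], [2.0]]    # d_out x rank
lora_a = [[3.0, 4.0]]      # rank x d_in
print(apply_lora(base, lora_b, lora_a, alpha=1, rank=1))  # [[4.0, 4.0], [6.0, 9.0]]

print(lora_param_count(4096, 4096, 8))  # 65536
print(4096 * 4096)                      # 16777216
```

For the illustrative 4096-wide layer, a rank-8 LoRA stores 256 times fewer numbers than a full update, which is why style and brand adapters can be shared as small downloads.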

DALL-E 3: The Conversational Editor

DALL-E 3's workflow integration is focused on speed and conversational iteration, making it a perfect tool for rapid prototyping and non-technical users.

Conversational Inpainting and Outpainting

While Stable Diffusion requires the user to manually mask areas for inpainting (modifying a part of the image) or use complex scripts for outpainting (expanding the image borders), DALL-E 3 handles these tasks through simple conversation.

•Ease of Use: A user can simply say, "Change the red car in the foreground to a blue bicycle," and DALL-E 3 will execute the command. This removes the need for external editing software for minor revisions and dramatically speeds up the ideation phase.

•Contextual Awareness: Because the LLM understands the context of the image and the user's request, it can often make more intelligent, contextually appropriate edits than a purely technical masking tool.
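For contrast, the manual masking step on the Stable Diffusion side can be pictured as a binary grid that marks which pixels may change. The sketch below builds a rectangular mask and composites new content into the masked area only. It illustrates what the mask means, not how inpainting is implemented: a real inpainting model re-runs diffusion inside the masked region rather than pasting pixels. All names are hypothetical.

```python
def rect_mask(height, width, top, left, bottom, right):
    """Binary mask with 1 inside the rectangle [top, bottom) x [left, right)."""
    return [[1 if top <= y < bottom and left <= x < right else 0
             for x in range(width)]
            for y in range(height)]


def composite(original, generated, mask):
    """Take generated pixels where the mask is 1 and original pixels elsewhere."""
    return [[g if m else o for o, g, m in zip(o_row, g_row, m_row)]
            for o_row, g_row, m_row in zip(original, generated, mask)]


original = [[0] * 4 for _ in range(4)]    # untouched image
generated = [[9] * 4 for _ in range(4)]   # newly generated content
mask = rect_mask(4, 4, top=1, left=1, bottom=3, right=3)

for row in composite(original, generated, mask):
    print(row)
```

Only the central 2x2 block changes. The conversational approach hides this step: the user describes the region in words, and the system has to work out the equivalent of the mask on its own.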

Integration with the OpenAI Ecosystem

DALL-E 3's integration with the broader OpenAI suite is a powerful workflow advantage. Users can seamlessly move from a text-based brainstorming session in ChatGPT to image generation, and then use the generated image as a visual reference for a new text prompt, all within the same interface. This unified experience is a significant draw for users who rely heavily on the OpenAI platform for multiple tasks.

Ethical and Safety Implications: The Open vs. Closed Debate

The open-source versus proprietary debate also has profound ethical and safety implications that must be considered in any comprehensive comparison.

DALL-E 3: Centralized Safety and Guardrails

OpenAI's proprietary model allows for centralized control over safety and content moderation.

•Content Filtering: DALL-E 3 has robust, non-negotiable guardrails that prevent the generation of harmful, illegal, or non-consensual content. This is a crucial feature for corporate users and public-facing applications.

•Bias Mitigation: OpenAI can continuously monitor and update the model to mitigate biases in the training data, ensuring that the generated images are diverse and representative.

•Transparency Challenge: The "black box" nature of the model means that while the guardrails are effective, the public cannot independently verify how they work or audit the model for subtle biases that may still exist.

Stable Diffusion 3: Decentralized Ethics and Freedom

Stable Diffusion 3's open-source nature presents a double-edged sword regarding ethics and safety.

•Unrestricted Freedom: The core model can be downloaded and run locally without any of the guardrails imposed by Stability AI. This grants users complete creative freedom, but it also means the model can be used to generate deepfakes, harmful content, or other material that violates ethical guidelines.

•Community Responsibility: The responsibility for ethical use is decentralized, falling on the individual user and the developers of the various front-ends (like Automatic1111 or ComfyUI). This requires a higher degree of personal responsibility from the user base.

•Academic Auditability: The open nature is a massive benefit for academic research. Scientists can study the model's inner workings, identify the sources of bias, and develop new, transparent methods for safety and ethical content generation. This is a vital contribution to the long-term, responsible development of AI.

The Final Verdict: A Strategic Choice for 2025

The ultimate choice between Stable Diffusion 3 and DALL-E 3 in 2025 is not about a single winner, but about a strategic alignment with your goals, budget, and philosophical stance on AI.

For developers, Stable Diffusion 3's open code and weights allow for deep inspection, modification, and integration into custom applications, and local use is free for high-volume work.

For businesses, it offers the ability to run the model on dedicated, in-house hardware for massive cost savings and complete data privacy.

The future of AI image generation is a dynamic ecosystem where both models will continue to thrive. Stable Diffusion 3 will remain the engine of innovation and the choice for those who demand ultimate control. DALL-E 3 will remain the most accessible, polished, and user-friendly gateway to AI art for the masses. The most successful creators will be those who master the strengths of both.