Stable Diffusion vs Midjourney: Open Source Power vs Proprietary Polish

The world of generative AI art is dominated by two distinct philosophies, each represented by a colossal platform: Midjourney and Stable Diffusion. On one side, you have Midjourney, a proprietary, polished, and community-driven service that consistently delivers stunning, artistic results with minimal effort. On the other, you have Stable Diffusion, an open-source powerhouse that offers unparalleled control, customization, and the freedom to run locally, but demands a steeper learning curve. The choice between these two is not merely a preference for one tool over another; it is a decision between a streamlined, high-end service and a customizable, community-driven ecosystem.

For creators, developers, and businesses entering the text-to-image space, understanding the fundamental differences between these two platforms is crucial. This comprehensive guide will dissect the core features, performance metrics, and philosophical underpinnings of both to help you determine which platform is the right fit for your creative journey in 2025. The ultimate question remains: which is the superior tool, and how does the debate of Stable Diffusion vs Midjourney shape the future of AI art?

The Core Philosophy: Open Source Power vs Proprietary Polish

The most significant difference between the two platforms is their underlying model and distribution strategy. This difference dictates everything from user experience to creative control and commercial rights.

Stable Diffusion: The Open-Source Ecosystem

Stable Diffusion, first released in 2022 by the CompVis group, Runway, and Stability AI and since developed by Stability AI, is an open-source model in the broad sense. The underlying code and model weights are publicly available (under licenses that vary by model version), allowing anyone to download, modify, and run the software on their own hardware. This openness has fostered an explosive ecosystem of innovation, leading to thousands of custom models, user interfaces (like Automatic1111 and ComfyUI), and extensions.

•Freedom and Control: Users have complete control over the generation process, including the ability to fine-tune models on their own data, use advanced control mechanisms like ControlNet, and generate images without built-in censorship or content filters (subject to the model license and local laws).

•Community and Customization: The community has created an enormous library of specialized models (full checkpoints as well as lightweight LoRA adapters) for specific styles, such as anime, photorealism, or architectural visualization. This level of customization is unmatched by any proprietary tool.

•Cost: Once the initial hardware investment is made, the marginal cost of each generation is little more than electricity, making it highly cost-effective for high-volume users.
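To make the local workflow concrete, here is a minimal sketch using Hugging Face's diffusers library, which is one of several ways to run Stable Diffusion from Python. The model ID, the default settings, and the GenerationSettings and generate_locally names are assumptions chosen for illustration, and the function needs a CUDA GPU plus the torch and diffusers packages installed.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GenerationSettings:
    """Illustrative bundle of the knobs a local user controls (hypothetical helper)."""
    prompt: str
    steps: int = 30              # number of denoising steps
    guidance_scale: float = 7.0  # CFG scale: how strongly to follow the prompt
    seed: int = 0                # a fixed seed makes a run repeatable
    width: int = 1024
    height: int = 1024


def generate_locally(settings: GenerationSettings,
                     model_id: str = "stabilityai/stable-diffusion-xl-base-1.0"):
    """Generate one image on a local CUDA GPU and return it as a PIL image.

    Assumption: torch and diffusers are installed and the weights for
    `model_id` can be downloaded (or are already cached).
    """
    # Heavy imports stay inside the function so the settings class can be
    # imported on machines without a GPU stack.
    import torch
    from diffusers import AutoPipelineForText2Image

    pipe = AutoPipelineForText2Image.from_pretrained(model_id, torch_dtype=torch.float16)
    pipe = pipe.to("cuda")

    generator = torch.Generator(device="cuda").manual_seed(settings.seed)
    result = pipe(
        prompt=settings.prompt,
        num_inference_steps=settings.steps,
        guidance_scale=settings.guidance_scale,
        width=settings.width,
        height=settings.height,
        generator=generator,
    )
    return result.images[0]
```

Calling generate_locally(GenerationSettings(prompt="a lighthouse at dusk, oil painting")) and saving the returned image is the whole workflow. Everything a hosted service decides for you (model, steps, guidance, seed) is an explicit argument here, which is the control the bullets above describe.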

Midjourney: The Proprietary, Hosted Service

Midjourney is a closed, proprietary service. The model is not publicly available, and all generations are performed on Midjourney’s dedicated servers, primarily accessed through a web interface or the Discord application.

•Polish and Consistency: Midjourney’s models are meticulously trained and tuned to produce images with a distinct, highly polished, and aesthetically pleasing style. It is renowned for its ability to generate high-quality, artistic images with minimal prompting effort.

•Ease of Use: The user experience is highly streamlined. The web interface is clean and intuitive, and the Discord bot requires only simple commands, making it incredibly accessible for beginners.

•Artistic Bias: Midjourney has a strong, inherent artistic bias that often elevates simple prompts into stunning works of art. While this is a strength for general artistic output, it can be a limitation for users who require precise, literal control over every element of the image.

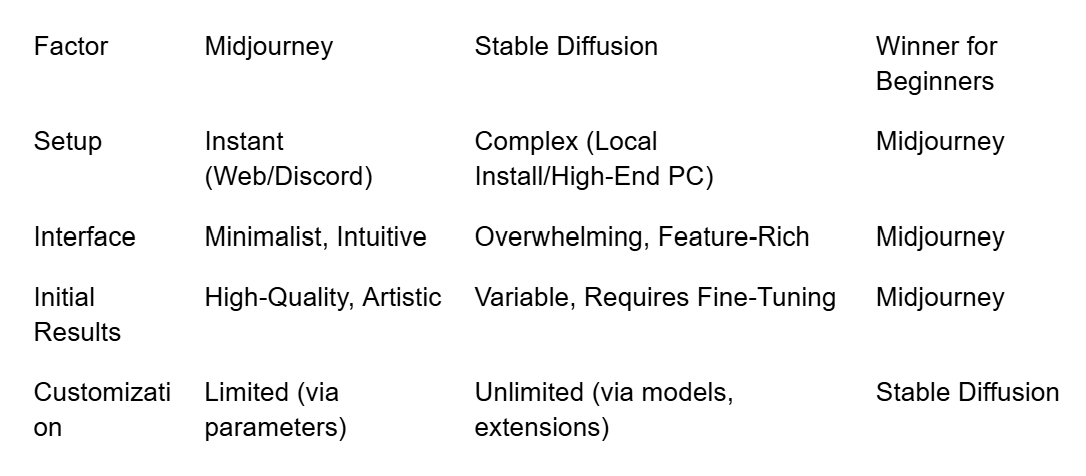

Stable Diffusion vs Midjourney for Beginners: The Learning Curve

For a newcomer to AI art, the initial experience with each platform is vastly different, which makes the question of Stable Diffusion vs Midjourney for beginners an easy one to answer.

Midjourney: The Fast Track to Stunning Art

Midjourney is unequivocally the more beginner-friendly option. The process is simple: subscribe, type a prompt, and receive four high-quality images in under a minute.

•Minimal Setup: No hardware requirements, no software installation, and no complex settings to adjust.

•High Success Rate: The model is so well-tuned that even a poorly written prompt often yields an aesthetically pleasing result. This instant gratification is a huge draw for new users.

•Community Learning: The Discord environment allows beginners to see the prompts and results of other users, accelerating the learning process through observation.

Stable Diffusion: The Steep Climb to Mastery

Stable Diffusion, while offering incredible power, presents a significant barrier to entry for beginners.

•Complex Setup: Running Stable Diffusion locally requires a powerful GPU (ideally 8GB VRAM or more) and a multi-step installation process involving various software dependencies. While one-click installers have simplified this, it remains a technical hurdle.

•Interface Overload: User interfaces like Automatic1111 are packed with hundreds of settings, sliders, and options (samplers, steps, CFG scale, LoRAs, embeddings, etc.). This level of control is overwhelming for a beginner who just wants to generate a simple image.

•Model Selection: The sheer number of available models and checkpoints means a beginner must first research and download the right model for their desired style, adding another layer of complexity.
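Of the settings listed above, the CFG scale is the one most easily explained with a few lines of arithmetic. In classifier-free guidance the model makes two noise predictions at each step, one with the prompt and one without, and the scale controls how far the result is pushed toward the prompted one. The sketch below uses plain Python lists as stand-ins for the model's predictions; the function name and the sample numbers are made up for illustration.

```python
def cfg_combine(uncond, cond, scale):
    """Classifier-free guidance: uncond + scale * (cond - uncond), element-wise."""
    if len(uncond) != len(cond):
        raise ValueError("predictions must have the same length")
    return [u + scale * (c - u) for u, c in zip(uncond, cond)]


# Toy "noise predictions" (real ones are large tensors, not 2-element lists).
unconditional = [0.0, 1.0]
conditional = [1.0, 3.0]

# scale = 1 reproduces the prompted prediction; larger values exaggerate
# the difference between the prompted and unprompted predictions.
for scale in (1.0, 7.5):
    print(scale, cfg_combine(unconditional, conditional, scale))
```

This is why a low CFG value tends to give looser, more varied images while a high value follows the prompt more literally, at the risk of harsher, over-saturated results.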

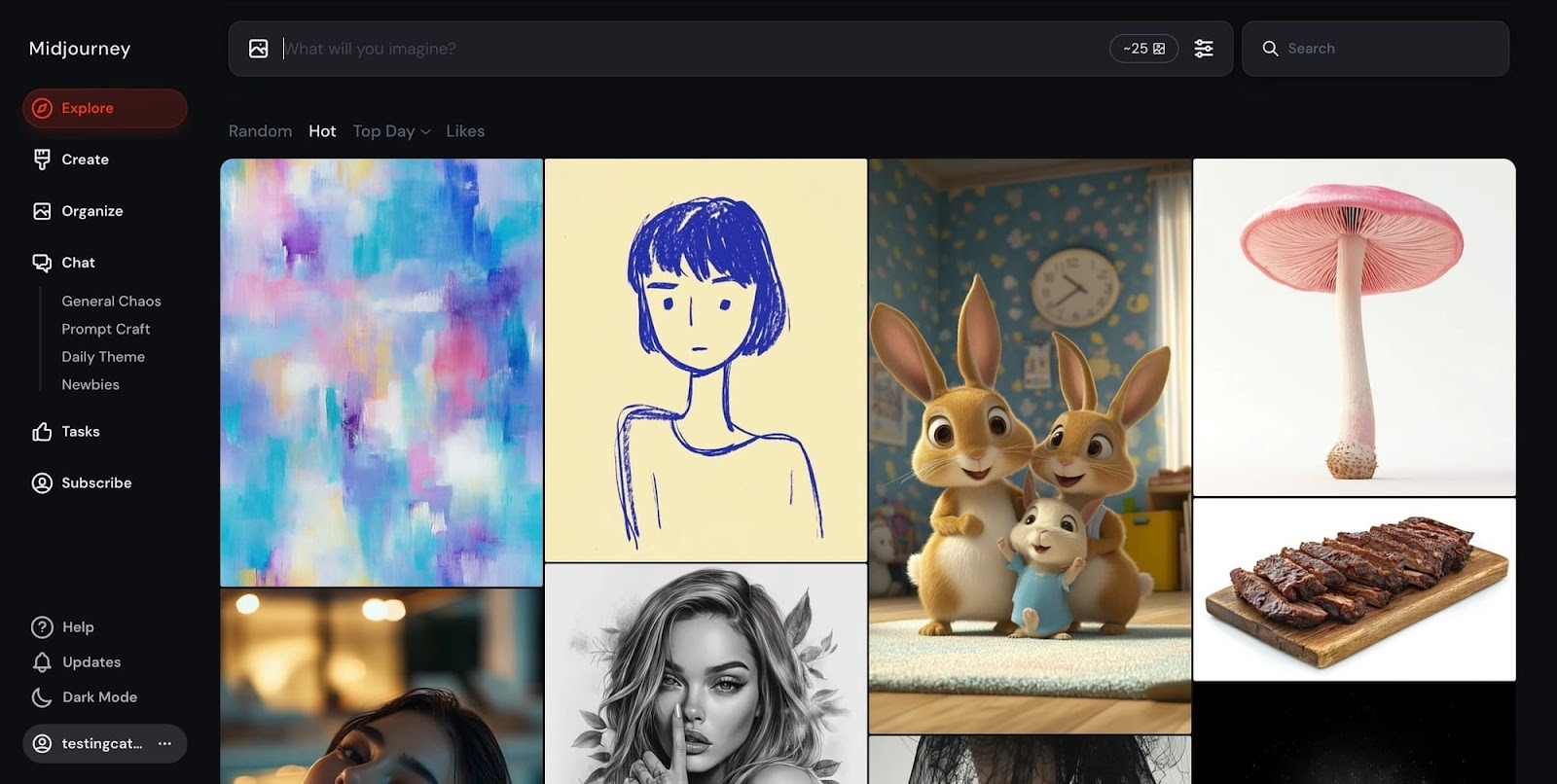

Midjourney Web Interface: A screenshot of the clean, minimalist Midjourney web interface, highlighting its ease of use and focus on the final image gallery.

Stable Diffusion vs Midjourney Speed: Performance and Hardware

The question of Stable Diffusion vs Midjourney speed is a complex one, because the answer for Stable Diffusion depends entirely on the user's setup.

Midjourney’s Consistent Speed

Midjourney offers a consistent, high-speed generation experience because it runs on powerful, optimized server hardware.

•Fast Mode: Generates four images in under a minute, often in 15-30 seconds, depending on server load. Fast generations draw on the fast GPU time included in the subscription plan.

•Relax Mode: Offers unlimited generations at a slower, variable speed, typically taking a few minutes per image.

The speed is reliable and requires no effort from the user, making it ideal for rapid prototyping and high-volume commercial work where time is money.

Stable Diffusion’s Variable Speed

Stable Diffusion’s speed is entirely dependent on the user's hardware, specifically the GPU.

•High-End GPU: With a top-tier GPU (e.g., NVIDIA RTX 4090), Stable Diffusion can generate images faster than Midjourney, often producing a single image in just a few seconds.

•Mid-Range GPU: On a more common mid-range card (e.g., RTX 3060), generation times can range from 30 seconds to several minutes, depending on the model and settings (sampler, steps).

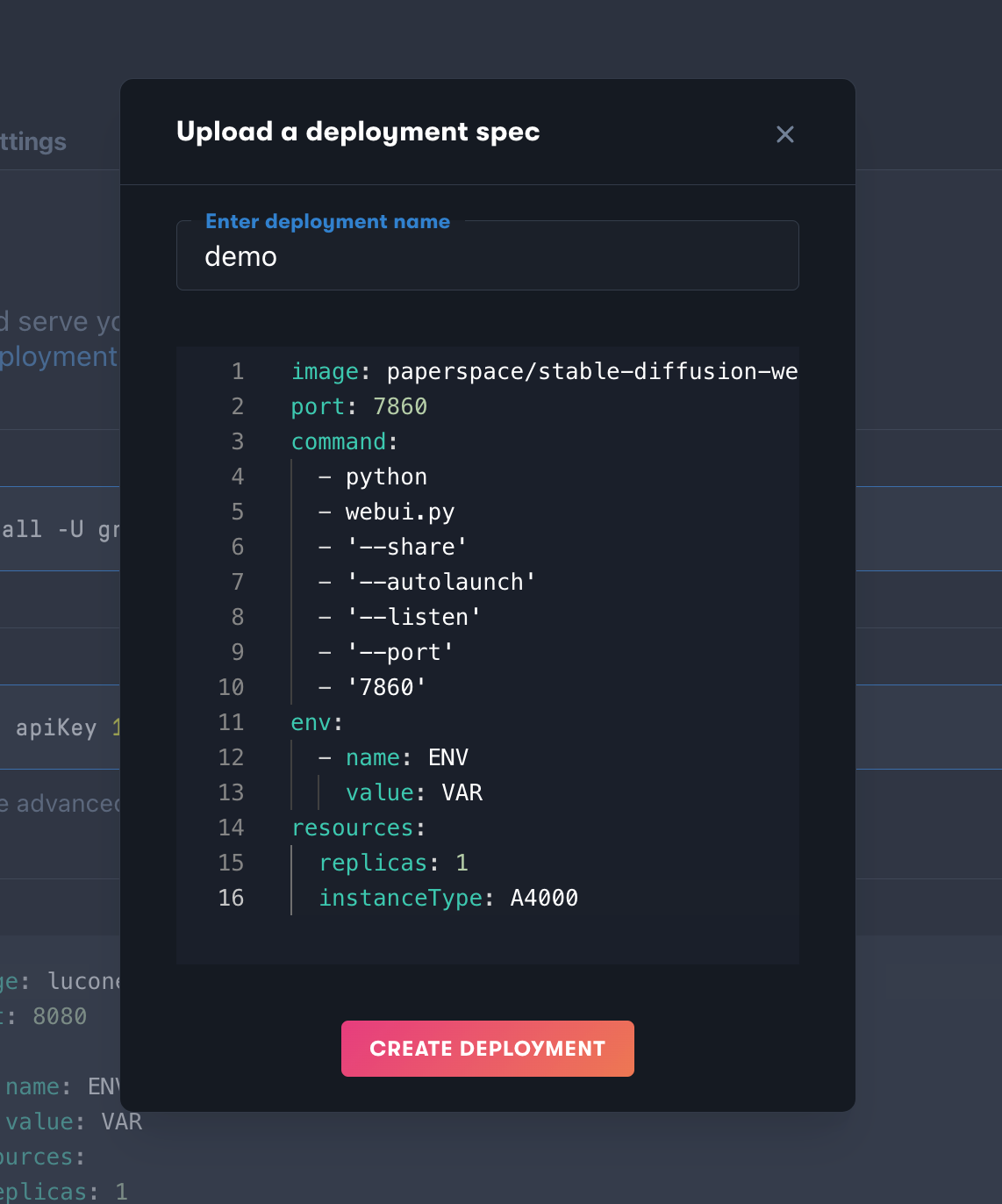

•Cloud Hosting: Users can also opt to run Stable Diffusion on cloud GPU services (like RunPod or vast.ai), which offers high speed for a pay-per-hour fee, effectively turning it into a hosted service similar to Midjourney, but with the added complexity of setup.

In a direct comparison, Midjourney offers guaranteed, fast speed for a fixed monthly fee, while Stable Diffusion offers the potential for faster speed, but only with a significant upfront hardware investment or the added complexity of cloud hosting.
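The trade-off in the paragraph above can be turned into rough arithmetic. The sketch below estimates how many months of subscription fees it takes to pay off a GPU, and what a single image costs on a GPU rented by the hour. Every price and timing in it is a placeholder, not a quote from Midjourney, a GPU vendor, or any cloud provider, so substitute your own numbers.

```python
def break_even_months(hardware_cost, monthly_subscription, monthly_electricity=0.0):
    """Months until buying hardware is cheaper than subscribing.

    Returns None when local running costs match or exceed the subscription,
    in which case the hardware never pays for itself.
    """
    monthly_saving = monthly_subscription - monthly_electricity
    if monthly_saving <= 0:
        return None
    return hardware_cost / monthly_saving


def cloud_cost_per_image(hourly_rate, seconds_per_image):
    """Cost of one image on a GPU rented by the hour."""
    return hourly_rate * seconds_per_image / 3600


# Placeholder figures only.
months = break_even_months(hardware_cost=1600, monthly_subscription=30, monthly_electricity=5)
per_image = cloud_cost_per_image(hourly_rate=0.50, seconds_per_image=6)
print(f"break-even after {months:.0f} months")
print(f"cloud cost per image: ${per_image:.4f}")
```

The calculation ignores resale value, setup time, and the fact that a GPU is useful for other work, so treat the result as a first approximation rather than a purchasing decision.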

The Power of Customization: Why Stable Diffusion Excels

While Midjourney excels in artistic polish, Stable Diffusion’s open-source nature gives it an overwhelming advantage in customization and control. This is the core of the "Open Source Power" argument.

ControlNet and Deterministic Art

Stable Diffusion’s ecosystem includes powerful extensions like ControlNet, which allows users to guide the image generation process with unprecedented precision. ControlNet can use an input image's pose, depth map, edges, or segmentation map to force the generated image to adhere to a specific structure. This is a game-changer for professional artists, architects, and designers who need to maintain consistency across a project or generate variations of an existing sketch or 3D model. Midjourney has introduced similar features (like --sref for style reference), but they lack the deterministic, structural control of ControlNet.
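As a rough illustration of the edge-based control described above, the sketch below first builds a crude edge mask in pure Python (a simple gradient threshold standing in for a real detector such as Canny) and then passes it to a ControlNet pipeline from Hugging Face's diffusers library. The helper names, the threshold, and the choice of the lllyasviel/sd-controlnet-canny weights are assumptions for illustration. The base_model_id argument must be a Stable Diffusion 1.5-compatible checkpoint you have access to, and the second function needs a CUDA GPU with torch, diffusers, numpy, and Pillow installed.

```python
def edge_map(gray, threshold=50):
    """Return a 0/255 edge mask from a 2-D list of grayscale values (0-255).

    An edge is marked wherever the brightness jump to the right-hand or
    lower neighbour reaches the threshold. This is far cruder than Canny.
    """
    height, width = len(gray), len(gray[0])
    edges = [[0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            right = gray[y][min(x + 1, width - 1)]
            below = gray[min(y + 1, height - 1)][x]
            jump = abs(right - gray[y][x]) + abs(below - gray[y][x])
            if jump >= threshold:
                edges[y][x] = 255
    return edges


def generate_from_edges(prompt, edges, base_model_id,
                        controlnet_id="lllyasviel/sd-controlnet-canny"):
    """Generate an image whose structure follows the given edge mask.

    Assumption: `edges` is already at the desired output resolution
    (for example 512 x 512), since the mask sets the image layout.
    """
    import numpy as np
    import torch
    from PIL import Image
    from diffusers import ControlNetModel, StableDiffusionControlNetPipeline

    # ControlNet expects an image: edges become white lines on black.
    control_image = Image.fromarray(np.array(edges, dtype=np.uint8)).convert("RGB")

    controlnet = ControlNetModel.from_pretrained(controlnet_id, torch_dtype=torch.float16)
    pipe = StableDiffusionControlNetPipeline.from_pretrained(
        base_model_id, controlnet=controlnet, torch_dtype=torch.float16
    ).to("cuda")
    return pipe(prompt, image=control_image, num_inference_steps=30).images[0]
```

The prompt still decides style and content, but the edge mask fixes where the outlines fall. That is what lets a sketch or a 3D render keep its composition across many generated variations.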

Custom Models and Fine-Tuning

The ability to fine-tune the Stable Diffusion model on a small dataset of images is invaluable for businesses and artists who need to generate images of a specific character, product, or style. This process, often done using techniques like LoRA (Low-Rank Adaptation), allows a user to create a highly personalized model that Midjourney simply cannot offer. This feature is a key reason why many developers and large studios choose Stable Diffusion despite the complexity. For a detailed guide on fine-tuning, the Hugging Face documentation is an excellent resource [1].
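To see why LoRA makes fine-tuning practical on consumer hardware, it helps to count parameters. LoRA leaves a weight matrix W frozen and learns two small matrices, B and A, whose product is added to it, so only rank times (rows plus columns) values are trained instead of rows times columns. The sketch below uses a 768 by 768 layer and rank 8 purely as example sizes; the function names are hypothetical.

```python
def full_param_count(rows, cols):
    """Trainable values when fine-tuning the whole weight matrix."""
    return rows * cols


def lora_param_count(rows, cols, rank):
    """Trainable values for LoRA: B is rows x rank, A is rank x cols."""
    return rank * (rows + cols)


def apply_lora(weight, b, a, scale=1.0):
    """Return weight + scale * (B @ A) using plain nested lists.

    `scale` plays the role of the alpha / rank factor used in practice.
    """
    rank = len(a)
    return [
        [
            weight[i][j] + scale * sum(b[i][k] * a[k][j] for k in range(rank))
            for j in range(len(weight[0]))
        ]
        for i in range(len(weight))
    ]


rows, cols, rank = 768, 768, 8
full = full_param_count(rows, cols)
lora = lora_param_count(rows, cols, rank)
print(f"full fine-tune: {full} values, LoRA: {lora} values ({lora / full:.1%})")

# Tiny rank-1 example: a 2x2 identity matrix nudged by B @ A.
updated = apply_lora([[1.0, 0.0], [0.0, 1.0]], b=[[1.0], [2.0]], a=[[0.5, 0.0]])
print(updated)
```

Because only the small B and A matrices are stored, a LoRA file is a fraction of the size of a full checkpoint and can be swapped in and out on top of the same base model.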

Stable Diffusion WebUI Setup: A screenshot of the complex, feature-rich interface of a Stable Diffusion WebUI, illustrating the vast number of settings available for advanced control.

Stable Diffusion Alternatives: The Ecosystem Beyond the Core

The open-source nature of Stable Diffusion has led to a proliferation of user-friendly interfaces and hosted services, effectively creating a vibrant ecosystem of alternative ways to use Stable Diffusion. These alternatives often bridge the gap between the complexity of the core model and the ease of use of Midjourney.

•Automatic1111/ComfyUI: These are the most popular local Web UIs, offering the full power of Stable Diffusion with a graphical interface. They are the go-to for power users.

•Leonardo.AI: A cloud-based platform that hosts many Stable Diffusion models and offers a much simpler, more Midjourney-like experience, making it a great entry point for beginners who want access to custom models without the local setup hassle.

•Fooocus: A simplified, one-click interface that aims to make Stable Diffusion as easy to use as Midjourney, focusing on high-quality, artistic results while hiding the complexity of the underlying settings.

•DreamStudio: The official, hosted interface from Stability AI, offering a clean, credit-based system for generating images with the latest Stable Diffusion models (SDXL, SD3).

These alternatives mean that choosing Stable Diffusion is not just choosing one piece of software; it is choosing an entire, rapidly evolving ecosystem of tools and models, each tailored to a specific need.

The Verdict: Which AI Generator is Right for You?

The debate of Stable Diffusion vs Midjourney ultimately comes down to a trade-off between ease of use and artistic polish versus control and customization.

Choose Midjourney if:

1.You are a beginner or casual user: You want stunning, artistic results with zero setup and minimal effort.

2.You prioritize aesthetic quality: You value the highly polished, cinematic look that Midjourney consistently delivers.

3.You value simplicity: You prefer a fixed monthly fee and a streamlined, hosted service without worrying about hardware or software updates.

Choose Stable Diffusion if:

1.You are a developer, designer, or power user: You require granular control over the generation process, including the use of ControlNet and inpainting.

2.You need custom models: You need to fine-tune the model on your own data for character consistency, product visualization, or a unique brand style.

3.You have the hardware: You own a powerful GPU and want to generate images for free (after the initial investment) and without any content restrictions.

In the current landscape, Midjourney is the better product, offering a superior user experience and a more consistent artistic output. However, Stable Diffusion is the better technology, offering the raw power and flexibility that drives the entire industry forward. The open-source nature of Stable Diffusion ensures that its capabilities will continue to expand at a pace that proprietary models struggle to match.

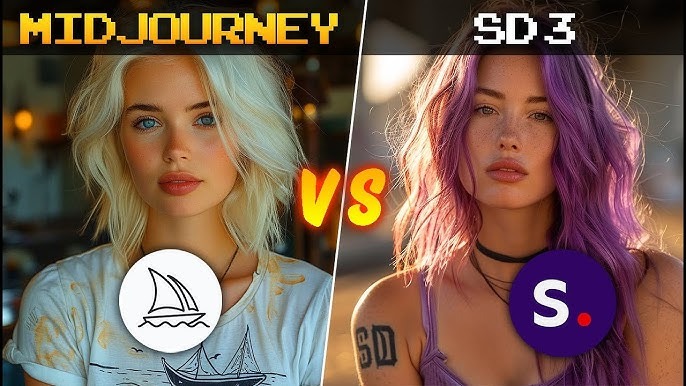

Image 3: Stable Diffusion vs Midjourney Art Comparison. A visual comparison of the artistic output of both platforms, highlighting Midjourney's artistic flair and Stable Diffusion's versatility.

Deep Dive: The Open-Source Advantage in a Proprietary World

The open-source nature of Stable Diffusion is not just a technical detail; it is a profound philosophical advantage that ensures its long-term relevance.

The core benefit is auditability and transparency. Because the code and weights are public, researchers and developers can inspect the model, understand how it works, and identify potential biases or flaws. This level of transparency is impossible with proprietary models like Midjourney. Furthermore, the open-source community acts as a massive, decentralized R&D department, constantly pushing the boundaries of what the model can do. Many widely used features, from ControlNet to various upscalers and samplers, came out of this wider research and community effort.

This decentralized innovation means that Stable Diffusion often adopts new features and techniques faster than its proprietary counterparts. While Midjourney is forced to play catch-up by integrating community-developed concepts into its closed system, Stable Diffusion users are often the first to benefit from the latest breakthroughs. For a deeper look into the open-source AI movement, the work of the EleutherAI collective is highly recommended [2].

The Future: Convergence and Coexistence

The future of AI image generation is not a winner-take-all scenario. Instead, we are seeing a convergence where both platforms borrow from each other's strengths. Midjourney is developing a more feature-rich web interface to simplify its workflow, while Stable Diffusion alternatives are making the open-source model more accessible to beginners.

Ultimately, the choice between Stable Diffusion vs Midjourney will continue to be a reflection of the user's values: do you value the polished, curated experience of a proprietary service, or the raw, untamed power of an open-source ecosystem? Both are essential to the growth of the AI art space, and both will continue to push the boundaries of creativity for years to come.

Stable Diffusion vs Midjourney Prompt Comparison: A side-by-side comparison of the prompt structure and output, showing how Midjourney's model interprets simple prompts more artistically, while Stable Diffusion requires more explicit detail.

Stable Diffusion 3 vs Midjourney Comparison: A visual comparison of the latest models, Stable Diffusion 3 and Midjourney V6, highlighting the current state of the art in both camps.

For those interested in the technical details of the underlying diffusion models, a comprehensive overview of the architecture is available on the Stability AI blog [3]. If you are looking to get started with the Stable Diffusion WebUI, a detailed setup guide can be found on the GitHub repository [4]. Finally, for a broader perspective on the best AI tools for content creation in 2025, a comprehensive industry report offers excellent insights [5].