Stable Diffusion vs Midjourney for Anime: The Ultimate AI Art Showdown

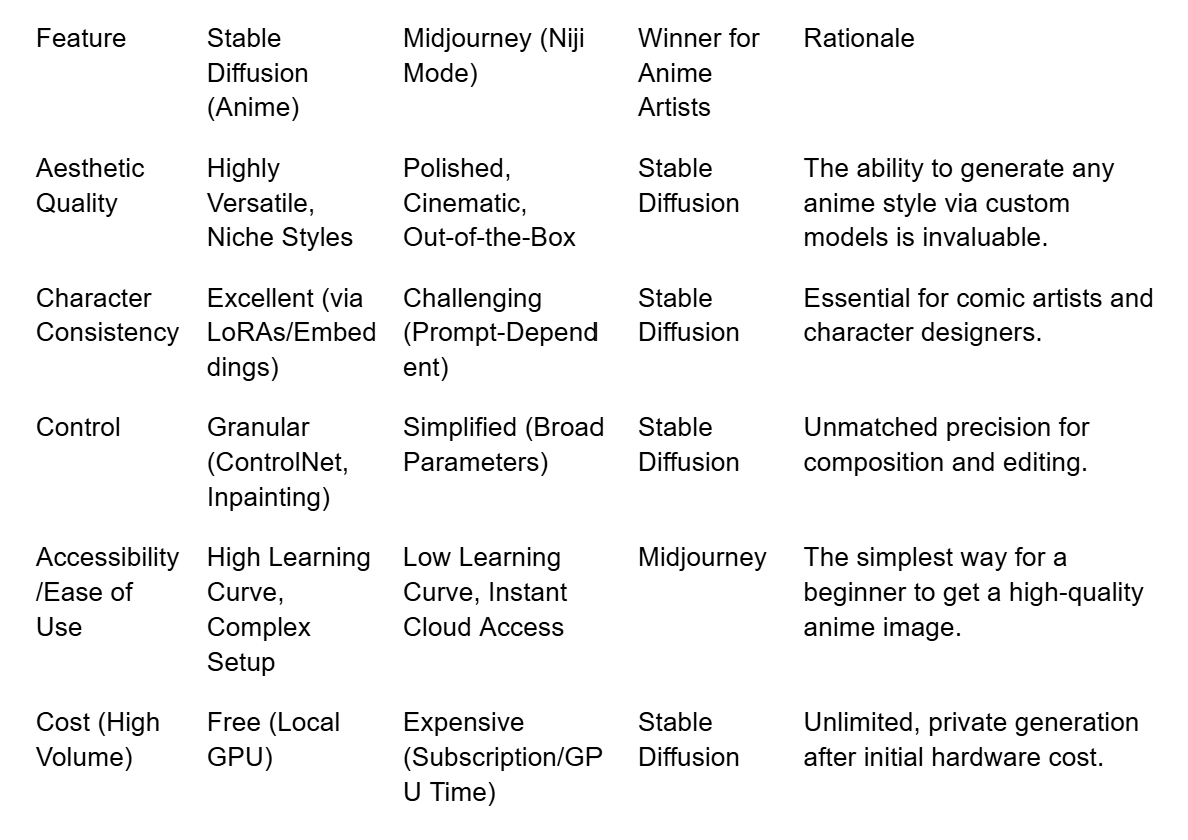

The world of AI image generation has been revolutionized by two titans: Stable Diffusion and Midjourney. While both are capable of producing stunning visuals across countless styles, the niche of anime art presents a unique and fiercely contested battleground. For artists, illustrators, and enthusiasts looking to create manga panels, character designs, or stylized backgrounds, the choice between these two platforms is a critical one, hinging on a fundamental trade-off: ultimate control and customization versus polished, out-of-the-box aesthetic quality.

This analysis examines the specific features, workflows, and communities that separate Stable Diffusion and Midjourney for anime work. We explore how Midjourney’s proprietary Niji mode delivers polished, high-fidelity results from simple prompts, and how Stable Diffusion’s open-source ecosystem of custom models and LoRAs offers greater flexibility and character consistency. By the end, you should have a clear sense of which tool suits your specific anime art goals in 2025.

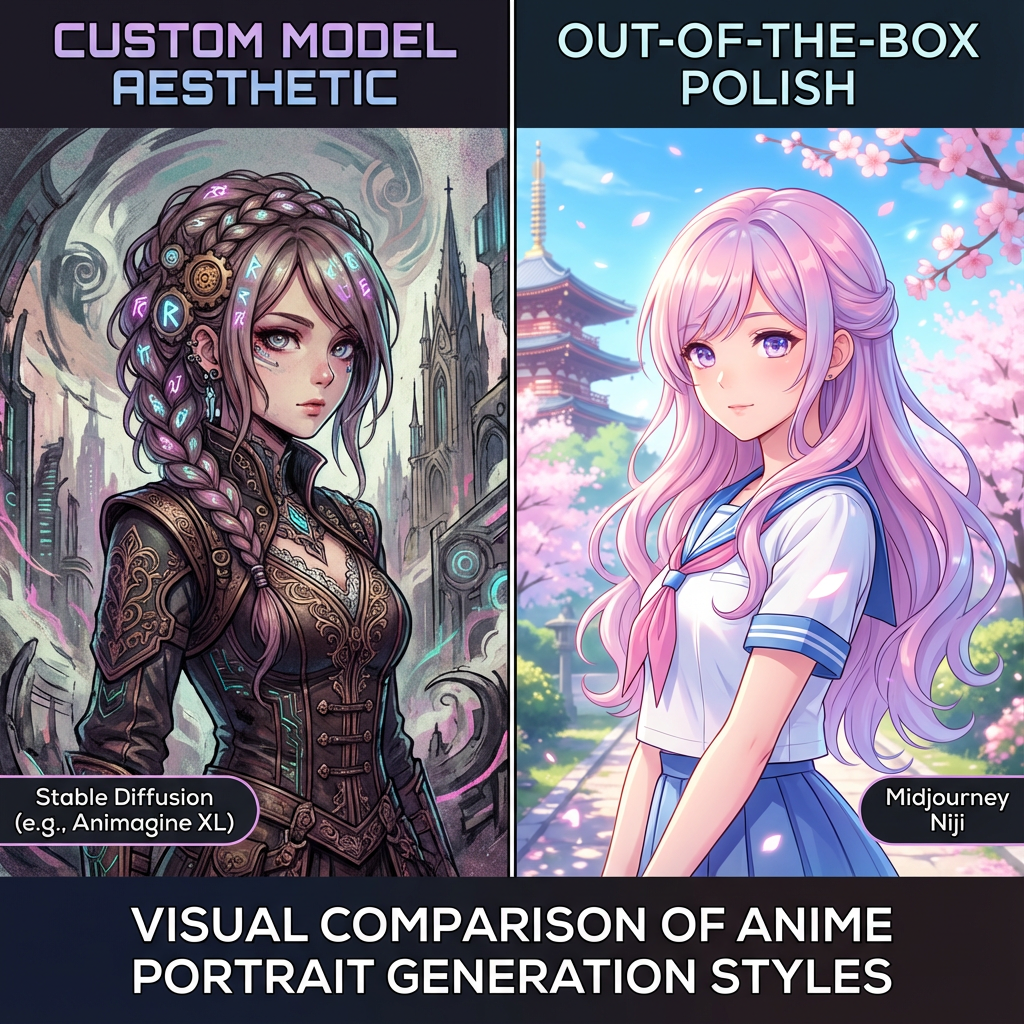

1. The Aesthetic Showdown: Polish vs. Customization

The first and most immediate difference between the two platforms lies in the visual output they produce for anime art.

Midjourney’s Niji Mode: Out-of-the-Box Polish

Midjourney’s dedicated Niji mode (currently Niji 6) is specifically trained on anime, manga, and illustrative art. It is designed to deliver a highly polished, aesthetically pleasing result with minimal effort.

•Strengths: Niji mode excels at generating beautiful, cinematic, and high-fidelity anime images that often look like they were created by a professional studio. It handles lighting, composition, and color palettes with exceptional skill, requiring only simple prompts to achieve stunning results.

•Limitations: The aesthetic, while beautiful, is largely dictated by the model. Style parameters exist (for example, --style cute and --style expressive in Niji 5, or --style raw in Niji 6), but the core visual signature remains consistent. Achieving a niche, retro, or highly specific mangaka style can be difficult or impossible.

Stable Diffusion: Custom Model Versatility

Stable Diffusion’s strength in the anime space comes from its open-source nature, which has fostered an ecosystem of specialized, community-trained models.

•Strengths: Platforms like Civitai host thousands of custom models (e.g., Animagine XL 3.0, Anything V5, AbyssOrangeMix) that are fine-tuned on specific anime styles, from classic 90s cel-shading to modern, highly detailed digital painting. This allows users to generate art that perfectly matches a niche aesthetic.

•Limitations: Achieving a high-quality result requires the user to select the right model, the right sampler, and often combine it with other tools like LoRAs, making the initial learning curve significantly steeper.

A visual comparison of two anime portraits. On the left, labeled 'Custom Model Aesthetic', a portrait generated with a highly tuned Stable Diffusion model (like Animagine XL) showing extreme detail and a specific niche style. On the right, labeled 'Out-of-the-Box Polish', a portrait generated with Midjourney's Niji mode, showing a clean, polished, and highly aesthetic style.

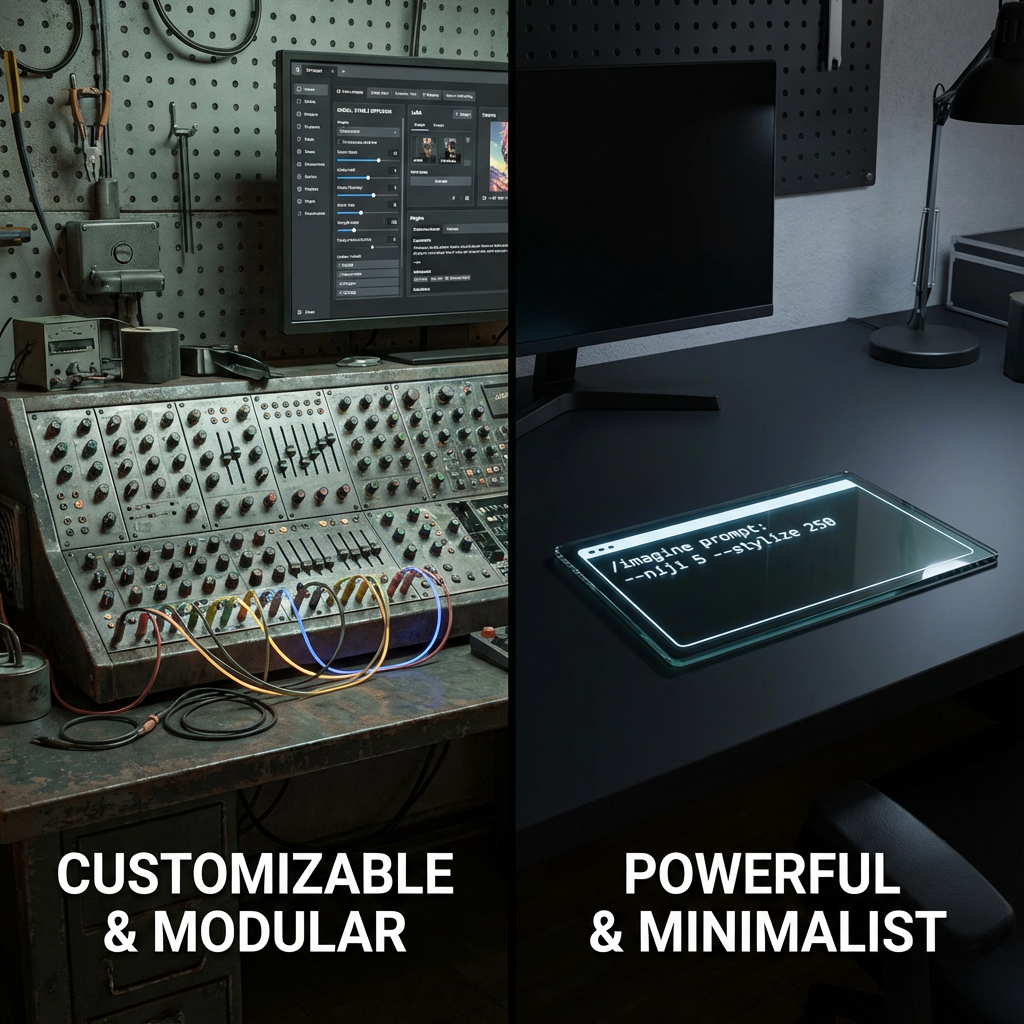

2. The Control Factor: Granular Tools vs. Simplified Parameters

For an anime artist, control over composition, pose, and character features is paramount. This is where the core philosophical difference between Stable Diffusion and Midjourney becomes most apparent.

Stable Diffusion: Granular, Modular Control

Stable Diffusion offers an unparalleled level of control through its modular architecture.

•LoRAs (Low-Rank Adaptation): These small files can be trained on a handful of images to inject a specific character, clothing item, or micro-style into the generation. This is the key to achieving character consistency and is a game-changer for comic artists and character designers [1].

•ControlNet: This powerful tool allows users to upload an image (e.g., a sketch, a line drawing, a pose reference) and force the AI to adhere to its composition, pose, or depth map. This is essential for artists who need to maintain a consistent layout across a series of panels or illustrations.

•Inpainting/Outpainting: SD’s local UIs (like Automatic1111 or ComfyUI) offer robust inpainting and outpainting tools, allowing for pixel-level manipulation and non-destructive editing of specific image areas.

Midjourney: Powerful, Simplified Parameters

Midjourney’s control is powerful but limited to a few core parameters that are applied globally to the prompt.

•Niji Parameters: Users can adjust parameters like --style, --s (stylize), and --c (chaos) to influence the final image. While effective, these are broad strokes compared to the surgical precision offered by Stable Diffusion’s tools.

•Vary (Region): Midjourney has introduced regional editing, allowing users to select an area and re-prompt it. However, this is still a closed-box feature compared to the open-source flexibility of SD’s inpainting.
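Because Midjourney's controls are plain-text flags appended to the prompt, they are easy to template. The following is a minimal sketch, not an official client: `build_niji_prompt` is a hypothetical helper, the value ranges (0 to 1000 for stylize, 0 to 100 for chaos) and the `--ar` aspect-ratio flag are assumptions taken from Midjourney's public parameter list rather than from this article, and the resulting string still has to be pasted into Discord or the web interface by hand.

```python
def build_niji_prompt(subject, style=None, stylize=None, chaos=None,
                      aspect_ratio=None, niji_version="6"):
    """Compose a Midjourney prompt string with trailing parameters.

    Hypothetical helper for illustration; it only formats text and does
    not talk to Midjourney.
    """
    # Assumed ranges: --s accepts 0..1000 and --c accepts 0..100.
    if stylize is not None and not 0 <= stylize <= 1000:
        raise ValueError("stylize is expected to be in 0..1000")
    if chaos is not None and not 0 <= chaos <= 100:
        raise ValueError("chaos is expected to be in 0..100")

    parts = [subject.strip(), f"--niji {niji_version}"]
    if style:
        parts.append(f"--style {style}")
    if stylize is not None:
        parts.append(f"--s {stylize}")
    if chaos is not None:
        parts.append(f"--c {chaos}")
    if aspect_ratio:
        parts.append(f"--ar {aspect_ratio}")
    return " ".join(parts)


prompt = build_niji_prompt(
    "silver-haired swordswoman on a rooftop at dusk",
    stylize=250,
    chaos=10,
    aspect_ratio="2:3",
)
print(prompt)
# silver-haired swordswoman on a rooftop at dusk --niji 6 --s 250 --c 10 --ar 2:3
```

Note that every flag applies to the whole image, which is the "broad strokes" limitation described above: there is no per-region or per-pose argument to template.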

A visual metaphor comparing control interfaces. On the left, a complex, modular control panel with many slots for plugins and settings (representing Stable Diffusion's LoRA and model control). On the right, a sleek, minimalist command line interface with a few powerful parameters (representing Midjourney's Niji mode parameters).

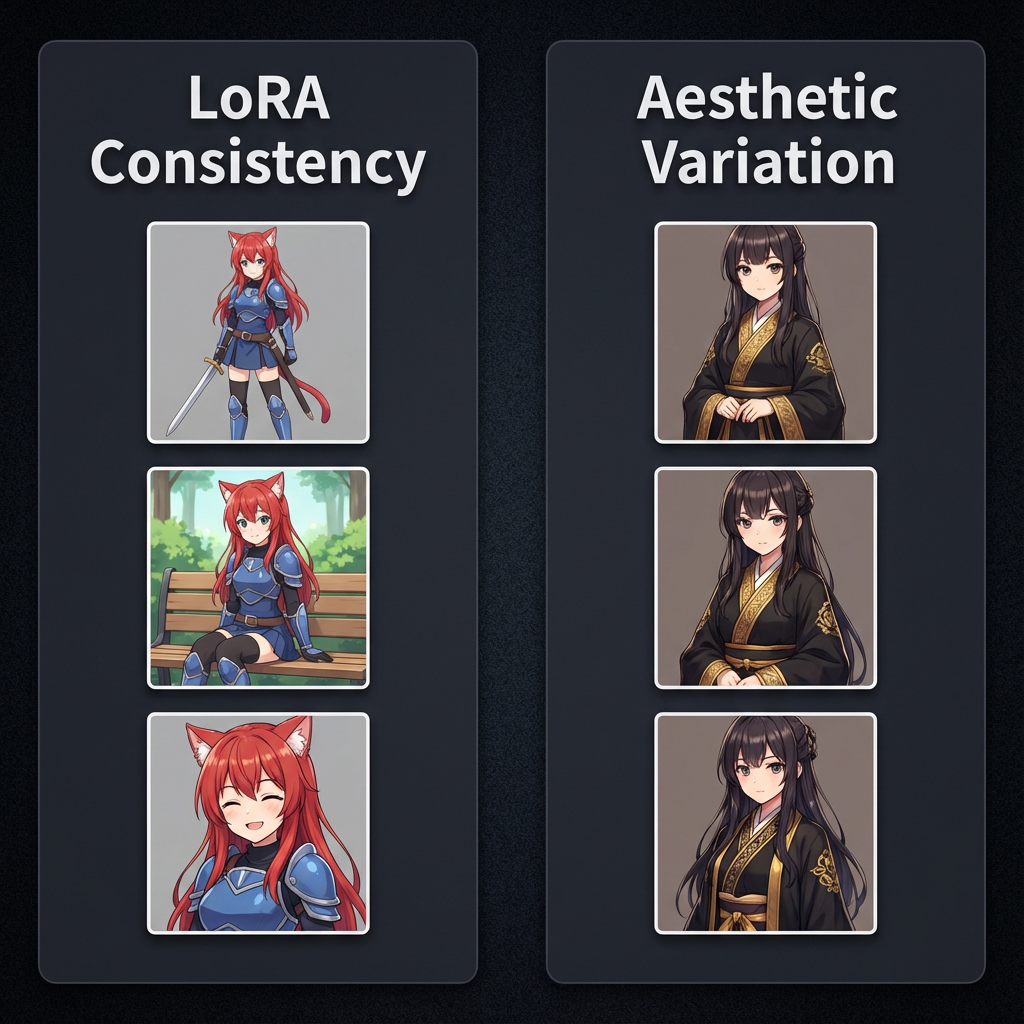

3. Achieving Character Consistency: The LoRA Advantage

One of the most challenging aspects of AI art is generating the same character in different poses, outfits, and scenes—a necessity for any anime or manga project.

Stable Diffusion: The Consistency Champion

When it comes to character consistency, Stable Diffusion is the clear winner, thanks to LoRAs.

•LoRA Training: An artist can train a LoRA on a small dataset of their original character. Once trained, this LoRA can be used with checkpoints that share its base architecture (for example, SD 1.5 or SDXL) to reliably generate that character, maintaining their facial features, hair, and clothing details with high fidelity [2].

•Textual Inversion/Embeddings: Similar to LoRAs, these tools allow users to teach the model a new concept (like a specific character) using a trigger word, further enhancing consistency.
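To see why a LoRA file is so small compared with a full model, it helps to look at the standard low-rank formulation: instead of storing a full update to a weight matrix W, a LoRA stores two thin matrices B and A and applies W' = W + scale * (B x A). The sketch below uses plain Python lists and toy sizes; the layer width of 1280 and rank of 16 are illustrative assumptions, not values taken from any particular anime model.

```python
def full_params(d_out, d_in):
    """Number of values in a full d_out x d_in weight update."""
    return d_out * d_in


def lora_params(d_out, d_in, rank):
    """Number of values in the two LoRA factors: B (d_out x rank), A (rank x d_in)."""
    return rank * (d_out + d_in)


def matmul(x, y):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*y)] for row in x]


def apply_lora(w, b, a, scale=1.0):
    """Return W + scale * (B @ A) without modifying the original weights."""
    delta = matmul(b, a)
    return [[wij + scale * dij for wij, dij in zip(w_row, d_row)]
            for w_row, d_row in zip(w, delta)]


# Illustrative sizes only (assumed, not measured from a real checkpoint).
full = full_params(1280, 1280)
low = lora_params(1280, 1280, rank=16)
print(full, low, full / low)  # 1638400 40960 40.0

# Tiny rank-1 example: the base weights stay untouched, the LoRA adds a delta.
base = [[1, 0], [0, 1]]
b = [[1], [2]]        # 2 x 1
a = [[0.5, 0.0]]      # 1 x 2
updated = apply_lora(base, b, a)
print(updated)        # [[1.5, 0.0], [1.0, 1.0]]
```

Because the base weights are left alone and only the small delta is added at load time, the same LoRA can be switched on, off, or re-weighted per image, which is what makes it practical for keeping one character stable across many scenes.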

Midjourney: The Prompting Challenge

Midjourney relies heavily on the quality and detail of the prompt to maintain consistency.

•Prompt Engineering: Users must meticulously describe the character's features in every prompt, often using a "character sheet" approach to ensure details are not lost. Recent versions have improved, and Midjourney now offers a character reference parameter (--cref) that conditions generation on a reference image, but subtle variations in facial structure, eye color, or hair length remain common across different generations.

•Style Consistency: Midjourney excels at maintaining a consistent style (e.g., "lofi anime art" or "Ghibli style"), but struggles with the identity of a specific, original character.

A visual comparison showing character consistency. On the left, a series of three images of the same anime character generated with Stable Diffusion and a LoRA, showing high consistency. On the right, a series of three images of a similar anime character generated with Midjourney, showing high aesthetic quality but subtle variations in character features.

4. The Ecosystem and Community: Open-Source Modularity vs. Closed-Source Polish

The surrounding community and available resources are vital for any AI artist.

Stable Diffusion: The Open-Source Hub

Stable Diffusion is the heart of a massive, open-source ecosystem driven by community contributions.

•Civitai and Hugging Face: These platforms are central repositories for thousands of free, community-trained resources, including custom anime models, LoRAs, Textual Inversions, and prompt guides. This modularity means the tool is constantly evolving and adapting to the latest anime trends.

•Knowledge Sharing: The community is highly technical, with users sharing detailed workflows, training data, and scripts, making it a powerful learning environment for those willing to dive into the technical details.

Midjourney: The Curated Gallery

Midjourney’s community is centered around its Discord server and official website gallery.

•Curated Aesthetic: The focus is on sharing polished, final results. The community is excellent for inspiration and seeing the cutting edge of AI art, but the underlying technical details (the "how") are proprietary and not shared.

•Ease of Use: The community is highly accessible, making it easy for beginners to jump in and start generating immediately without needing to download or manage external files.

A visual metaphor comparing communities. On the left, a busy, open-source coding environment with many developers sharing files and models (representing Stable Diffusion's community on Civitai/Hugging Face). On the right, a vibrant, well-organized Discord server with users sharing polished final images (representing Midjourney's community).

5. Workflow and Accessibility: Local Power vs. Cloud Simplicity

The way you access and use the tools is a major differentiator in the Stable Diffusion vs Midjourney for Anime debate.

Stable Diffusion: The Local Powerhouse

Stable Diffusion is primarily designed to be run locally on a user's computer, though cloud services are available.

•Local Workflow: Running SD locally (via UIs like Automatic1111 or ComfyUI) requires a capable GPU (at least 8 GB of VRAM is recommended). This gives the user complete control, privacy, and the ability to generate images without paying per-image fees. However, the setup and maintenance are complex [3].

•Flexibility: The local setup allows for complex, multi-step workflows, such as generating a base image, using ControlNet to fix the pose, and then using Inpainting to refine details—all in a single, integrated environment.
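The VRAM recommendation above can be sanity-checked with simple arithmetic: model weights alone occupy roughly (number of parameters) x (bytes per parameter). The sketch below is a back-of-the-envelope estimate under stated assumptions. The figure of about 2.6 billion parameters for the SDXL UNet is an approximation, and the estimate deliberately ignores the text encoders, the VAE, activations, and any LoRA or ControlNet loaded alongside, so real usage is higher.

```python
def weights_gib(num_params, bytes_per_param=2):
    """Memory taken by model weights alone, in GiB (2 bytes per value = fp16)."""
    return num_params * bytes_per_param / 1024**3


# Approximate parameter count for the SDXL UNet (assumption for illustration).
SDXL_UNET_PARAMS = 2.6e9

fp16_gib = weights_gib(SDXL_UNET_PARAMS)                     # half precision
fp32_gib = weights_gib(SDXL_UNET_PARAMS, bytes_per_param=4)  # full precision

print(f"fp16 weights: {fp16_gib:.1f} GiB")
print(f"fp32 weights: {fp32_gib:.1f} GiB")
```

Under these assumptions the half-precision weights alone take a little under 5 GiB, which is why 8 GB cards are workable for SDXL-based anime models only with half precision and some headroom management, while full precision would not fit.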

Midjourney: The Cloud-Based Simplicity

Midjourney is exclusively a cloud-based service, accessed primarily through Discord or its web interface.

•Cloud Workflow: The process is simple: type a prompt, and the image is generated on Midjourney’s servers. This requires no technical setup and is accessible from any device.

•Speed and Convenience: This simplicity is its greatest strength, allowing for rapid iteration and sharing, but it means the user is entirely dependent on Midjourney’s servers and proprietary tools.

A visual comparison of the workflow. On the left, a complex desktop setup with multiple windows open for local generation, model management, and inpainting (representing Stable Diffusion's local workflow). On the right, a clean, cloud-based interface with a simple text prompt box (representing Midjourney's cloud-based workflow).

6. Pricing and Commercial Use

The cost structure and commercial rights are vital considerations for professional artists.

Midjourney’s Subscription Model

Midjourney operates on a subscription model, where users pay a monthly fee for a set amount of "Fast GPU Time" [4].

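•Cost: Monthly plans at the time of writing run from Basic ($10/month) through Standard ($30/month) and Pro ($60/month) to Mega ($120/month). The main differences are the amount of fast generation time and, from the Standard tier up, access to "Relaxed" (slower, but unlimited) generations.

•Commercial Rights: Under Midjourney’s terms of service, paid subscribers can generally use their generated images commercially, making it a straightforward choice for artists selling prints or using the art in commercial projects. Larger companies should check the terms, which tie commercial use to the higher tiers above a revenue threshold.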

Stable Diffusion’s Cost Structure

Stable Diffusion’s cost is more complex, depending on the chosen workflow.

•Local Cost: The primary cost is the initial investment in a powerful GPU. Once the hardware is purchased, generation is essentially free apart from electricity, with no cap on volume and full privacy.

•Cloud Cost: If running on a cloud service (like RunPod or vast.ai), the cost is pay-per-hour for GPU time, which can be highly cost-effective for short bursts of intense work.

•Commercial Rights: The base Stable Diffusion models (like SDXL) are released as open weights under licenses such as CreativeML Open RAIL++-M, which generally allow commercial use subject to use restrictions. Users must also be mindful of the licenses of any custom models or LoRAs they download from community sites.
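The trade-off between an up-front GPU purchase, an hourly cloud rental, and a monthly subscription comes down to simple break-even arithmetic. The sketch below is illustrative only: the $600 GPU price and the $0.50 hourly cloud rate are hypothetical placeholders, the $10 and $60 monthly fees are the Basic and Pro prices quoted above, and electricity, resale value, and time spent on setup are all ignored.

```python
import math


def break_even_months(hardware_cost, monthly_fee):
    """Whole months of subscription fees needed to equal a one-time hardware cost."""
    return math.ceil(hardware_cost / monthly_fee)


def cloud_cost(hours, hourly_rate):
    """Total cost of renting a cloud GPU for a number of hours."""
    return hours * hourly_rate


GPU_PRICE = 600.0          # hypothetical price of a local GPU
CLOUD_HOURLY_RATE = 0.50   # hypothetical pay-per-hour rental rate

months_vs_basic = break_even_months(GPU_PRICE, 10)  # versus the $10 plan
months_vs_pro = break_even_months(GPU_PRICE, 60)    # versus the $60 plan
weekend_burst = cloud_cost(10, CLOUD_HOURLY_RATE)   # ten hours of rented GPU time

print(months_vs_basic, months_vs_pro, weekend_burst)  # 60 10 5.0
```

With these placeholder numbers, a local GPU pays for itself quickly only against the more expensive plans, and an occasional burst of cloud time costs less than a single month of any subscription. Plugging in real prices for your region changes the figures but not the shape of the comparison.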

Legal and Ethical Considerations for Anime Art

The choice between Stable Diffusion and Midjourney for anime also carries significant legal and ethical weight, particularly concerning the use of the generated art for commercial purposes.

Midjourney offers a clear, albeit proprietary, commercial license for its paid users. The company has a strict policy against generating explicit or suggestive content, which is enforced by content filters. While this provides a degree of brand safety, the lack of transparency regarding its training data, which is widely believed to include copyrighted anime and manga, remains a point of contention for some artists and corporations.

Stable Diffusion, being open-source, shifts much of the legal and ethical burden to the user. The base models are generally considered usable for commercial work under their license terms, but many of the highly specialized anime models and LoRAs are trained on copyrighted fan art, specific mangaka styles, or even direct character art. While this allows for incredible stylistic fidelity, it exposes the user to potential legal risks if the generated art is used commercially without proper due diligence on the model's training data and license. For professional anime artists, this requires a solid understanding of the specific model licenses (e.g., CreativeML Open RAIL-M) and a careful approach to avoid direct infringement. This level of user responsibility is a key distinction from the more curated and legally simplified environment of Midjourney.

7. Technical Deep Dive: Niji 6 vs. Animagine XL 3.0

To truly compare Stable Diffusion and Midjourney for anime, we must look at the state-of-the-art models in each camp.

Midjourney’s Niji 6

The latest Niji model, Niji 6, features significant improvements over its predecessors, particularly in:

•Japanese Text Rendering: Niji 6 has improved its ability to render Japanese text (kana and kanji) accurately within the image, a crucial feature for manga and illustrative work [5].

•Stylistic Range: It offers a wider range of styles and better adherence to complex prompts, though it still maintains its signature polished look.

Stable Diffusion’s Animagine XL 3.0

Animagine XL 3.0 is one of the most popular and advanced community-trained anime models built on the SDXL architecture.

•Enhanced Anatomy: Compared with earlier releases, it shows notable improvements in hand anatomy and overall character structure, addressing a common weakness in earlier AI models.

•Tag Efficiency: It is engineered to understand complex tag ordering and prompting strategies, allowing for highly specific control over the final image's details and composition.
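Tag-based anime models are prompted with comma-separated tags rather than sentences, and the order of those tags matters. The sketch below shows one way to keep that order consistent across a batch of prompts. It assumes an ordering of subject count first, then character name, then series, then everything else, followed by quality tags such as "masterpiece" and "best quality". That ordering and those quality tags are assumptions for illustration and should be checked against the model card of whichever checkpoint you use; `build_tag_prompt` is a hypothetical helper.

```python
def build_tag_prompt(subject, character=None, series=None, details=(),
                     quality=("masterpiece", "best quality")):
    """Join tags in a fixed order, dropping blanks and duplicates.

    Assumed order: subject, character, series, free-form details, quality tags.
    """
    tags = [subject]
    if character:
        tags.append(character)
    if series:
        tags.append(series)
    tags.extend(details)
    tags.extend(quality)

    seen = set()
    ordered = []
    for tag in tags:
        normalized = tag.strip().lower()
        # Keep only the first occurrence of each tag so the order stays stable.
        if normalized and normalized not in seen:
            seen.add(normalized)
            ordered.append(normalized)
    return ", ".join(ordered)


tag_prompt = build_tag_prompt(
    "1girl",
    details=["silver hair", "school uniform", "night", "looking at viewer"],
)
print(tag_prompt)
# 1girl, silver hair, school uniform, night, looking at viewer, masterpiece, best quality
```

Keeping the subject and identity tags at the front and varying only the trailing detail tags is a simple way to change pose or setting between images while leaving the character description untouched.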

8. Final Verdict: Choosing Your Anime AI Partner

The choice between Stable Diffusion and Midjourney for anime is not a matter of which is objectively "better," but which is better for your specific workflow and goals.

Choose Midjourney (Niji Mode) if:

•You are a beginner or hobbyist who wants instant, high-quality, aesthetically pleasing anime art with minimal effort.

•You prioritize speed and simplicity over granular control and character consistency.

•You are comfortable with a subscription model and cloud-based workflow.

Choose Stable Diffusion if:

•You are a professional artist, comic creator, or character designer who requires absolute control over character consistency, pose, and niche art styles.

•You are willing to invest time in learning complex workflows (LoRAs, ControlNet) and/or money in a powerful local GPU.

•You value the open-source ecosystem and the ability to generate images privately and without per-image costs.

For the dedicated anime artist whose work depends on consistent characters and specific styles, the contest is won by the open-source platform's modularity and control. However, for the casual user, Midjourney remains the most accessible path to beautiful anime art.